Introduction to Generative AI: Vision Series (3/3) - DDPM

Introduction

This is part three of a series on generative A.I. If you'd like to check out the other posts in the series, you can take a look here and here.

In this series, we've been dissecting some of the more popular algorithms used for image generation, such as Variational Autoencoders and Generative Adversarial Networks. This time, we'll be tackling another very popular category of generative models - diffusion models.

Denoising Diffusion Probabilistic Models

Diffusion models, more formally known as Denoising Diffusion Probabilistic Models (DDPMs), are a recent addition to the space of generative A.I. that have had a massive impact on the field. Diffusion models are the backbone behind models like Stable Diffusion and DALL-E 2.

Before DDPMs, the primary image generation algorithms were based on the VAE or the GAN. GANs specialize in creating highly realistic images but sometimes struggle to produce a wide range of images. VAEs have the opposite problem - it's very easy to produce a wide range of images, but it's harder to get realistic features with them.

Diffusion models are a sort of happy medium between the two - it's possible to get highly diverse images while still preserving image quality. As such, they're an incredibly hot topic of research right now.

Let’s see how they work!

Setup

Diffusion models are, at their core, a type of latent variable model. We've covered these before in my last two posts (here and here), but this time we're going to do something a little different.

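Let's start with some input vector $\mathbf{x}_0$ that is sampled from the true data distribution $q(\mathbf{x}_0)$. We're going to define our model $p_\theta(\mathbf{x}_0)$ in terms of $T$ different latent variables $\mathbf{x}_1, \dots, \mathbf{x}_T$ instead of just one. If we define $\mathbf{x}_1, \dots, \mathbf{x}_T$ to all be the same dimension as $\mathbf{x}_0$, we can write

$$p_\theta(\mathbf{x}_0) = \int p_\theta(\mathbf{x}_{0:T}) \, d\mathbf{x}_{1:T}$$

We're essentially marginalizing the joint distribution $p_\theta(\mathbf{x}_{0:T})$ over all of the latent variables $\mathbf{x}_{1:T}$.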

Reverse Process

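We need something extra in order to compute the expression written above. A common setup in situations like this is a Markov chain, which is a formulation where each $\mathbf{x}_{t-1}$ depends only on $\mathbf{x}_t$. With this in mind, we can define distributions from $\mathbf{x}_T$ to $\mathbf{x}_0$ so that we can tractably compute the joint distribution $p_\theta(\mathbf{x}_{0:T})$. In this setting, the joint distribution $p_\theta(\mathbf{x}_{0:T})$ is known as the reverse process, and is defined as

$$p_\theta(\mathbf{x}_{0:T}) = p(\mathbf{x}_T) \prod_{t=1}^{T} p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$$

where

$$p(\mathbf{x}_T) = \mathcal{N}(\mathbf{x}_T; \mathbf{0}, \mathbf{I})$$

and

$$p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t) = \mathcal{N}\big(\mathbf{x}_{t-1}; \boldsymbol{\mu}_\theta(\mathbf{x}_t, t), \boldsymbol{\Sigma}_\theta(\mathbf{x}_t, t)\big)$$

This last definition is the core of the Markov chain. It defines the Gaussian transitions that carry an input $\mathbf{x}_T$ through the chain to the output $\mathbf{x}_0$.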

Diffusion Process

The opposite of the reverse process is known as the diffusion process or forward process, written as $q(\mathbf{x}_{1:T} \mid \mathbf{x}_0)$. This process is also fixed to a Markov chain, which gradually adds noise to the data $\mathbf{x}_0$. We can further parameterize how much noise is added at each step using a variance schedule $\beta_1, \dots, \beta_T$. With this in mind, the diffusion process can be defined as

$$q(\mathbf{x}_{1:T} \mid \mathbf{x}_0) = \prod_{t=1}^{T} q(\mathbf{x}_t \mid \mathbf{x}_{t-1})$$

However, we need to define the transition $q(\mathbf{x}_t \mid \mathbf{x}_{t-1})$ so that the added noise at each step doesn't increase the overall variance. If we add noise for $T$ timesteps without scaling the input properly, the variance keeps growing with every step. Starting from an input with unit variance, we would end up with a variance of $1 + \sum_{t=1}^{T} \beta_t$. At any given timestep, we want $\mathbf{x}_t$ to have unit variance, so we have to manually scale the inputs $\mathbf{x}_{t-1}$ to satisfy this property.

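Let's call this scaling factor $\gamma_t$ for now. At each step, we'll also add noise sampled from $\mathcal{N}(\mathbf{0}, \beta_t\mathbf{I})$ according to our variance schedule. By the properties of the normal distribution, we can write this noise as $\sqrt{\beta_t}\,\boldsymbol{\epsilon}$ where $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. We can then write

$$\mathbf{x}_t = \gamma_t\,\mathbf{x}_{t-1} + \sqrt{\beta_t}\,\boldsymbol{\epsilon}$$

Let's assume that $\mathbf{x}_{t-1}$ has unit variance (i.e. $\mathrm{Var}(\mathbf{x}_{t-1}) = 1$). By the properties of variance (refresher if you need it), and because $\boldsymbol{\epsilon}$ is independent of $\mathbf{x}_{t-1}$, you can then write

$$\mathrm{Var}(\mathbf{x}_t) = \gamma_t^2\,\mathrm{Var}(\mathbf{x}_{t-1}) + \beta_t\,\mathrm{Var}(\boldsymbol{\epsilon}) = \gamma_t^2 + \beta_t$$

We're going to force $\mathrm{Var}(\mathbf{x}_t) = 1$. Solving for $\gamma_t$ gives $\gamma_t = \sqrt{1 - \beta_t}$. Therefore, we know that

$$\mathbf{x}_t = \sqrt{1 - \beta_t}\,\mathbf{x}_{t-1} + \sqrt{\beta_t}\,\boldsymbol{\epsilon}$$

I won't do a full proof by induction here, but what we did above works for every $t$, assuming that we scale the input $\mathbf{x}_0$ to also have unit variance. From this, we can then write

$$q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}\big(\mathbf{x}_t; \sqrt{1 - \beta_t}\,\mathbf{x}_{t-1}, \beta_t\mathbf{I}\big)$$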
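As a quick sanity check on this derivation, here is a minimal pure-Python Monte Carlo sketch. It uses only the standard library, and scalar samples stand in for images. The helper name simulate_variance and the values $\beta = 0.02$ and 100 steps are illustrative assumptions, not settings from the paper. The sketch pushes unit-variance samples through repeated noising steps, once with the $\sqrt{1-\beta_t}$ scaling and once without, and reports the empirical variance.

```python
import math
import random


def simulate_variance(beta, steps, scale_input, n=5000, seed=0):
    """Push n unit-variance samples through `steps` noising steps (illustrative helper).

    scale_input=True:  x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * eps
    scale_input=False: x_t = x_{t-1} + sqrt(beta) * eps
    Returns the empirical variance after the last step.
    """
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]  # x_0 with unit variance
    scale = math.sqrt(1.0 - beta) if scale_input else 1.0
    noise_std = math.sqrt(beta)
    for _ in range(steps):
        xs = [scale * x + noise_std * rng.gauss(0.0, 1.0) for x in xs]
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / n


print(simulate_variance(0.02, 100, scale_input=True))   # stays close to 1
print(simulate_variance(0.02, 100, scale_input=False))  # grows to roughly 3
```

With the scaling, the variance should hover around 1. Without it, the variance should land near $1 + 100 \times 0.02 = 3$, because each unscaled step adds $\beta$ to the variance.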

Arbitrary Sampling

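One extra nice thing about the formulation above is that we can derive a closed form for $q(\mathbf{x}_t \mid \mathbf{x}_0)$ for any arbitrary $t$. Let's do that really quick. To start, we'll rewrite $\mathbf{x}_t$ in terms of $\mathbf{x}_{t-2}$, using independent noise variables $\boldsymbol{\epsilon}_{t-1}, \boldsymbol{\epsilon}_{t-2} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$:

$$\begin{aligned} \mathbf{x}_t &= \sqrt{1-\beta_t}\,\mathbf{x}_{t-1} + \sqrt{\beta_t}\,\boldsymbol{\epsilon}_{t-1} \\ &= \sqrt{(1-\beta_t)(1-\beta_{t-1})}\,\mathbf{x}_{t-2} + \sqrt{(1-\beta_t)\beta_{t-1}}\,\boldsymbol{\epsilon}_{t-2} + \sqrt{\beta_t}\,\boldsymbol{\epsilon}_{t-1} \end{aligned}$$

The last two terms here are Gaussian random variables with mean $\mathbf{0}$. That means we can merge them into a single Gaussian whose variance is the sum of their variances, as follows

$$\sqrt{(1-\beta_t)\beta_{t-1}}\,\boldsymbol{\epsilon}_{t-2} + \sqrt{\beta_t}\,\boldsymbol{\epsilon}_{t-1} = \sqrt{1 - (1-\beta_t)(1-\beta_{t-1})}\,\bar{\boldsymbol{\epsilon}}_{t-2}$$

where we've reparameterized with $\bar{\boldsymbol{\epsilon}}_{t-2} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. Let's define $\alpha_t = 1 - \beta_t$ to make this a bit easier to look at. Going back to what we had earlier, this means

$$\mathbf{x}_t = \sqrt{\alpha_t\alpha_{t-1}}\,\mathbf{x}_{t-2} + \sqrt{1 - \alpha_t\alpha_{t-1}}\,\bar{\boldsymbol{\epsilon}}_{t-2}$$

We can continue this calculation all the way to $\mathbf{x}_0$. If we also define $\bar\alpha_t = \prod_{s=1}^{t} \alpha_s$, we can then write

$$\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}, \quad \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$$

and therefore

$$q(\mathbf{x}_t \mid \mathbf{x}_0) = \mathcal{N}\big(\mathbf{x}_t; \sqrt{\bar\alpha_t}\,\mathbf{x}_0, (1 - \bar\alpha_t)\mathbf{I}\big)$$

This means that we can sample $\mathbf{x}_t$ at any timestep directly from $\mathbf{x}_0$, without running through the full Markov chain. That is really nice for training.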
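The closed form can be checked numerically. The sketch below is pure Python. The helper names are hypothetical, and the linear schedule from $10^{-4}$ to $0.02$ over $T = 1000$ steps is an assumption borrowed from the DDPM paper's setup. It tracks the signal coefficient and the accumulated noise variance one step at a time, using $v_t = \alpha_t v_{t-1} + \beta_t$. It then compares them with $\sqrt{\bar\alpha_t}$ and $1 - \bar\alpha_t$.

```python
import math


def linear_betas(T=1000, beta_1=1e-4, beta_T=0.02):
    """Linear variance schedule beta_1, ..., beta_T (assumed DDPM-style values)."""
    return [beta_1 + (beta_T - beta_1) * i / (T - 1) for i in range(T)]


def iterate_forward(betas):
    """Write x_t = a * x_0 + (noise with variance v) and update (a, v) step by step."""
    a, v = 1.0, 0.0  # at t = 0 we have x_0 itself and no noise
    for beta in betas:
        a = math.sqrt(1.0 - beta) * a    # the signal is rescaled
        v = (1.0 - beta) * v + beta      # old noise is rescaled, new noise is added
    return a, v


def closed_form(betas):
    """Return (sqrt(alpha_bar_t), 1 - alpha_bar_t) from the closed form."""
    alpha_bar = 1.0
    for beta in betas:
        alpha_bar *= 1.0 - beta
    return math.sqrt(alpha_bar), 1.0 - alpha_bar


betas = linear_betas()
for t in (1, 10, 500, 1000):
    print(t, iterate_forward(betas[:t]), closed_form(betas[:t]))
```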

Objective

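As is the case for most latent variable models, we'd like to minimize the Kullback-Leibler divergence $D_{KL}$ between our approximate posterior $q(\mathbf{x}_{1:T} \mid \mathbf{x}_0)$ and the true posterior $p_\theta(\mathbf{x}_{1:T} \mid \mathbf{x}_0)$. We've derived this before (see my first article). It turns out that minimizing the Kullback-Leibler divergence in this setting is the same as maximizing the ELBO (evidence lower bound). We have that

$$\log p_\theta(\mathbf{x}_0) \geq \mathbb{E}_{q(\mathbf{x}_{1:T} \mid \mathbf{x}_0)}\left[\log \frac{p_\theta(\mathbf{x}_{0:T})}{q(\mathbf{x}_{1:T} \mid \mathbf{x}_0)}\right]$$

where we want to maximize the right-hand term. For the diffusion model, we can take this a little further using the definitions of our joint distributions from above, and write an objective $L$ to minimize as

$$L = \mathbb{E}_q\left[-\log p(\mathbf{x}_T) - \sum_{t \geq 1} \log \frac{p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)}{q(\mathbf{x}_t \mid \mathbf{x}_{t-1})}\right]$$

This formulation is nicer because we don't have to compute the full joint distributions. However, there's still an issue: similar to the objective in the VAE, it has way too much variance to be useful as a training signal. The derivation for this next bit is super long (the original paper that introduced diffusion models goes into it in depth), but the TL;DR is that you can rewrite it as follows

$$L = \mathbb{E}_q\Big[\underbrace{D_{KL}\big(q(\mathbf{x}_T \mid \mathbf{x}_0) \,\|\, p(\mathbf{x}_T)\big)}_{L_T} + \sum_{t > 1} \underbrace{D_{KL}\big(q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) \,\|\, p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)\big)}_{L_{t-1}} \underbrace{- \log p_\theta(\mathbf{x}_0 \mid \mathbf{x}_1)}_{L_0}\Big]$$

We can ignore $L_T$ when training since it is constant: the forward process has no learnable parameters, and $p(\mathbf{x}_T)$ is a fixed standard normal. Therefore, we only have to ensure that $L_{t-1}$ and $L_0$ are tractable terms during training. The original paper defines a discrete decoder for $L_0$, but since it's not as important for the implementation, we'll focus on $L_{t-1}$.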

Loss Tractability

In my previous articles, I've discussed the statistical fact that the Kullback-Leibler divergence between two Gaussian distributions has a closed-form formula. Computing it exactly therefore gives a lower-variance training signal than a Monte Carlo estimate. We already have an expression for $p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$ from above, but we need to find a nice way to express $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0)$ for the computation of $L_{t-1}$.

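Let's start by using Bayes' Rule to rewrite $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0)$ and then simplify. The second step uses the Markov property of the forward process, $q(\mathbf{x}_t \mid \mathbf{x}_{t-1}, \mathbf{x}_0) = q(\mathbf{x}_t \mid \mathbf{x}_{t-1})$.

$$q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) = \frac{q(\mathbf{x}_t \mid \mathbf{x}_{t-1}, \mathbf{x}_0)\, q(\mathbf{x}_{t-1} \mid \mathbf{x}_0)}{q(\mathbf{x}_t \mid \mathbf{x}_0)} = q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) \frac{q(\mathbf{x}_{t-1} \mid \mathbf{x}_0)}{q(\mathbf{x}_t \mid \mathbf{x}_0)}$$

Each of these terms can be written as a Gaussian distribution using the expressions we've already derived:

$$q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}\big(\mathbf{x}_t; \sqrt{\alpha_t}\,\mathbf{x}_{t-1}, \beta_t\mathbf{I}\big)$$

$$q(\mathbf{x}_{t-1} \mid \mathbf{x}_0) = \mathcal{N}\big(\mathbf{x}_{t-1}; \sqrt{\bar\alpha_{t-1}}\,\mathbf{x}_0, (1 - \bar\alpha_{t-1})\mathbf{I}\big)$$

$$q(\mathbf{x}_t \mid \mathbf{x}_0) = \mathcal{N}\big(\mathbf{x}_t; \sqrt{\bar\alpha_t}\,\mathbf{x}_0, (1 - \bar\alpha_t)\mathbf{I}\big)$$

So we can rewrite $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0)$ as

$$\begin{aligned} q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) &\propto \exp\left(-\frac{1}{2}\left(\frac{(\mathbf{x}_t - \sqrt{\alpha_t}\,\mathbf{x}_{t-1})^2}{\beta_t} + \frac{(\mathbf{x}_{t-1} - \sqrt{\bar\alpha_{t-1}}\,\mathbf{x}_0)^2}{1 - \bar\alpha_{t-1}} - \frac{(\mathbf{x}_t - \sqrt{\bar\alpha_t}\,\mathbf{x}_0)^2}{1 - \bar\alpha_t}\right)\right) \\ &= \exp\left(-\frac{1}{2}\left(\left(\frac{\alpha_t}{\beta_t} + \frac{1}{1 - \bar\alpha_{t-1}}\right)\mathbf{x}_{t-1}^2 - \left(\frac{2\sqrt{\alpha_t}}{\beta_t}\mathbf{x}_t + \frac{2\sqrt{\bar\alpha_{t-1}}}{1 - \bar\alpha_{t-1}}\mathbf{x}_0\right)\mathbf{x}_{t-1} + C(\mathbf{x}_t, \mathbf{x}_0)\right)\right) \end{aligned}$$

where we've collected terms that don't depend on $\mathbf{x}_{t-1}$ into $C(\mathbf{x}_t, \mathbf{x}_0)$. We've grouped the terms this way so that, after completing the square in $\mathbf{x}_{t-1}$, the result is another Gaussian distribution. If we let

$$\tilde\beta_t = 1 \Big/ \left(\frac{\alpha_t}{\beta_t} + \frac{1}{1 - \bar\alpha_{t-1}}\right) = \frac{1 - \bar\alpha_{t-1}}{1 - \bar\alpha_t}\,\beta_t$$

$$\tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0) = \tilde\beta_t\left(\frac{\sqrt{\alpha_t}}{\beta_t}\mathbf{x}_t + \frac{\sqrt{\bar\alpha_{t-1}}}{1 - \bar\alpha_{t-1}}\mathbf{x}_0\right) = \frac{\sqrt{\alpha_t}\,(1 - \bar\alpha_{t-1})}{1 - \bar\alpha_t}\mathbf{x}_t + \frac{\sqrt{\bar\alpha_{t-1}}\,\beta_t}{1 - \bar\alpha_t}\mathbf{x}_0$$

we can then write

$$q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) \propto \exp\left(-\frac{\big(\mathbf{x}_{t-1} - \tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0)\big)^2}{2\tilde\beta_t}\right)$$

and therefore we have

$$q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) = \mathcal{N}\big(\mathbf{x}_{t-1}; \tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0), \tilde\beta_t\mathbf{I}\big)$$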
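To make the result concrete, here is a small pure-Python helper (hypothetical name posterior_params, scalar arithmetic only). It computes $\tilde\beta_t$ and the two coefficients of $\tilde{\boldsymbol{\mu}}_t$ from a list of betas, using 1-indexed timesteps to match the math.

```python
import math


def posterior_params(betas, t):
    """Parameters of q(x_{t-1} | x_t, x_0) for a 1-indexed timestep t >= 1.

    Returns (beta_tilde, coef_x0, coef_xt), so that
    mu_tilde = coef_x0 * x_0 + coef_xt * x_t.
    """
    alphas = [1.0 - b for b in betas]
    alpha_bar_t = math.prod(alphas[:t])
    alpha_bar_prev = math.prod(alphas[:t - 1])  # empty product = 1 when t = 1
    beta_t, alpha_t = betas[t - 1], alphas[t - 1]

    beta_tilde = (1.0 - alpha_bar_prev) / (1.0 - alpha_bar_t) * beta_t
    coef_x0 = math.sqrt(alpha_bar_prev) * beta_t / (1.0 - alpha_bar_t)
    coef_xt = math.sqrt(alpha_t) * (1.0 - alpha_bar_prev) / (1.0 - alpha_bar_t)
    return beta_tilde, coef_x0, coef_xt


betas = [1e-4 + (0.02 - 1e-4) * i / 999 for i in range(1000)]
print(posterior_params(betas, 500))
```

At $t = 1$ the empty product gives $\bar\alpha_0 = 1$, so $\tilde\beta_1 = 0$ and $\tilde{\boldsymbol{\mu}}_1 = \mathbf{x}_0$. Once $\mathbf{x}_0$ is known, there is no uncertainty left about the previous step.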

Objective Reparameterization

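With this expression in hand, let's return to the original loss objective. We'll handle $L_0$ separately. Looking at $L_{t-1}$, we have the Kullback-Leibler divergence between $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0)$ and $p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$.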

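In the original paper, the reverse-process variance $\boldsymbol{\Sigma}_\theta(\mathbf{x}_t, t)$ is fixed to $\sigma_t^2\mathbf{I}$, with $\sigma_t^2 = \beta_t$, for simplicity. Because both $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0)$ and $p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$ are Gaussian distributions, we can rewrite the expression as follows

$$L_{t-1} = \mathbb{E}_q\left[\frac{1}{2\sigma_t^2}\left\|\tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0) - \boldsymbol{\mu}_\theta(\mathbf{x}_t, t)\right\|^2\right] + C$$

where $C$ is a constant that does not depend on $\theta$.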
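Why does the KL reduce to a squared distance between means? For two Gaussians with fixed variances, everything except the mean term is constant. The pure-Python sketch below checks this in the univariate case. The helper gaussian_kl is hypothetical, and the variance values are arbitrary stand-ins for $\tilde\beta_t$ and $\sigma_t^2$. It confirms that the KL equals $(\tilde\mu - \mu_\theta)^2 / 2\sigma_t^2$ plus a constant that does not depend on $\mu_\theta$.

```python
import math


def gaussian_kl(mu_q, var_q, mu_p, var_p):
    """KL( N(mu_q, var_q) || N(mu_p, var_p) ) for univariate Gaussians."""
    return 0.5 * (math.log(var_p / var_q) + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)


var_q = 0.5e-3   # stand-in for beta_tilde_t (fixed)
sigma2 = 1.0e-3  # stand-in for sigma_t^2 = beta_t (fixed)
mu_q = 0.3       # stand-in for mu_tilde_t(x_t, x_0)

constant = gaussian_kl(mu_q, var_q, mu_q, sigma2)  # the KL when the means match
for mu_p in (0.3, 0.31, 0.35):
    kl = gaussian_kl(mu_q, var_q, mu_p, sigma2)
    mse_term = (mu_q - mu_p) ** 2 / (2 * sigma2)
    print(mu_p, kl, mse_term + constant)  # the last two columns agree
```

Only the squared-difference term depends on the model's prediction, so minimizing the KL is the same as minimizing a weighted MSE between the means.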

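The most straightforward implementation of the objective above would be to predict the forward process posterior mean $\tilde{\boldsymbol{\mu}}_t$ with $\boldsymbol{\mu}_\theta$. However, let's bring back the property we derived earlier for the forward diffusion process. We know that

$$\mathbf{x}_t(\mathbf{x}_0, \boldsymbol{\epsilon}) = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}$$

where $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, so we can rewrite $\mathbf{x}_0 = \frac{1}{\sqrt{\bar\alpha_t}}\big(\mathbf{x}_t - \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}\big)$. Substituting this into $\tilde{\boldsymbol{\mu}}_t$, we have

$$L_{t-1} - C = \mathbb{E}_{\mathbf{x}_0, \boldsymbol{\epsilon}}\left[\frac{1}{2\sigma_t^2}\left\|\frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t(\mathbf{x}_0, \boldsymbol{\epsilon}) - \frac{\beta_t}{\sqrt{1 - \bar\alpha_t}}\boldsymbol{\epsilon}\right) - \boldsymbol{\mu}_\theta\big(\mathbf{x}_t(\mathbf{x}_0, \boldsymbol{\epsilon}), t\big)\right\|^2\right]$$

Here, we notice that $\boldsymbol{\mu}_\theta$ must predict $\frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t - \frac{\beta_t}{\sqrt{1 - \bar\alpha_t}}\boldsymbol{\epsilon}\right)$ given $\mathbf{x}_t$. However, since $\mathbf{x}_t$ is already an input to $\boldsymbol{\mu}_\theta$, we can explicitly define the model to compute this quantity from its inputs:

$$\boldsymbol{\mu}_\theta(\mathbf{x}_t, t) = \frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t - \frac{\beta_t}{\sqrt{1 - \bar\alpha_t}}\boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\right)$$

where we train a model $\boldsymbol{\epsilon}_\theta$ to predict the added noise at step $t$. With this formulation, the sampling procedure $\mathbf{x}_{t-1} \sim p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$ becomes much simpler

$$\mathbf{x}_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t - \frac{\beta_t}{\sqrt{1 - \bar\alpha_t}}\boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\right) + \sigma_t\mathbf{z}$$

where $\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. This is a very similar trick to the one used in the VAE - we're basically moving the noise out of the actual model and into a small random variable that we sample at inference time.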
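The identity behind the $\boldsymbol{\epsilon}$ parameterization can be verified numerically. If $\boldsymbol{\epsilon}_\theta$ returned the true noise, the resulting mean would equal $\tilde{\boldsymbol{\mu}}_t(\mathbf{x}_t, \mathbf{x}_0)$ exactly. The sketch below (pure Python, scalar $\mathbf{x}_0$, hypothetical helper name) computes both forms of the mean for one noised sample.

```python
import math
import random


def check_eps_parameterization(betas, t, x0, seed=0):
    """Return (mu_tilde, mu_eps) for one noised sample at a 1-indexed timestep t."""
    rng = random.Random(seed)
    alphas = [1.0 - b for b in betas]
    alpha_t, beta_t = alphas[t - 1], betas[t - 1]
    alpha_bar_t = math.prod(alphas[:t])
    alpha_bar_prev = math.prod(alphas[:t - 1])

    eps = rng.gauss(0.0, 1.0)
    xt = math.sqrt(alpha_bar_t) * x0 + math.sqrt(1.0 - alpha_bar_t) * eps

    # Posterior mean written in terms of x_0 and x_t
    coef_x0 = math.sqrt(alpha_bar_prev) * beta_t / (1.0 - alpha_bar_t)
    coef_xt = math.sqrt(alpha_t) * (1.0 - alpha_bar_prev) / (1.0 - alpha_bar_t)
    mu_tilde = coef_x0 * x0 + coef_xt * xt

    # The same mean written in terms of x_t and the noise (what mu_theta mimics)
    mu_eps = (xt - beta_t / math.sqrt(1.0 - alpha_bar_t) * eps) / math.sqrt(alpha_t)
    return mu_tilde, mu_eps


betas = [1e-4 + (0.02 - 1e-4) * i / 999 for i in range(1000)]
print(check_eps_parameterization(betas, t=500, x0=0.4))  # two (nearly) identical numbers
```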

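With this new parameterization, the original objective also becomes a bit simpler.

$$\mathbb{E}_{\mathbf{x}_0, \boldsymbol{\epsilon}}\left[\frac{\beta_t^2}{2\sigma_t^2\,\alpha_t\,(1 - \bar\alpha_t)}\left\|\boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta\big(\sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}, t\big)\right\|^2\right]$$

where we can also compute the $\mathbf{x}_t$ input for $\boldsymbol{\epsilon}_\theta$ as $\sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}$. Both parameterizations from above are equally valid, but it's usually more effective to train for $\boldsymbol{\epsilon}_\theta$ in practice. The authors also derived a simplified version of the above objective. It removes the leading constant and samples $t$ uniformly from $1$ through $T$, as follows

$$L_{\text{simple}}(\theta) = \mathbb{E}_{t, \mathbf{x}_0, \boldsymbol{\epsilon}}\left[\left\|\boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta\big(\sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}, t\big)\right\|^2\right]$$

This final objective is the one most often used in practice, and it's what we'll use for our implementation (finally!) in the following sections.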
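As a rough illustration of what $L_{\text{simple}}$ measures, here is a pure-Python Monte Carlo estimate for a toy dataset of scalars. The dataset, the helper name l_simple_estimate, and the linear schedule are assumptions for illustration. A predictor that always outputs zero scores about $\mathbb{E}[\epsilon^2] = 1$, while a perfect noise predictor would score 0.

```python
import math
import random


def l_simple_estimate(data, eps_model, betas, n_samples=2000, seed=0):
    """Monte Carlo estimate of L_simple for scalar data points.

    eps_model(x_t, t, x_0) returns a noise prediction. A real epsilon_theta only
    sees (x_t, t); x_0 is passed here only so that an "oracle" can be tested.
    """
    rng = random.Random(seed)
    alpha_bars, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        alpha_bars.append(running)

    total = 0.0
    for _ in range(n_samples):
        x0 = rng.choice(data)                # x_0 ~ data
        t = rng.randint(1, len(betas))       # t ~ Uniform{1, ..., T}
        eps = rng.gauss(0.0, 1.0)            # eps ~ N(0, 1)
        a_bar = alpha_bars[t - 1]
        xt = math.sqrt(a_bar) * x0 + math.sqrt(1.0 - a_bar) * eps
        total += (eps - eps_model(xt, t, x0)) ** 2  # squared noise error
    return total / n_samples


betas = [1e-4 + (0.02 - 1e-4) * i / 999 for i in range(1000)]
data = [-0.8, -0.1, 0.5, 0.9]  # hypothetical toy "dataset" in [-1, 1]
print(l_simple_estimate(data, lambda xt, t, x0: 0.0, betas))  # roughly 1
```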

Implementation

With the theory out of the way, we can finally get around to implementing the model. The main structure of the neural network we'll construct for $\boldsymbol{\epsilon}_\theta$ is going to be a UNet, modeled on the famous PixelCNN.

UNets are common structures in computer vision that use an encoder-decoder-like architecture to return outputs of the same size as the input. That is very useful here. We'd like the model to predict the noise we originally added to get $\mathbf{x}_t$, and that noise is the same size as $\mathbf{x}_t$ itself.

It's also very common to include skip connections (or residual connections) in UNets. These are connections between layers where the output of one layer is added to, or concatenated with, the input of a later layer; the UNet we build here concatenates. This improves information flow through deep neural networks, since it preserves earlier features and reintroduces them into later stages of the network.

In the original paper, the authors found it useful to make some modifications to the PixelCNN backbone that improved the overall performance for the purposes of diffusion. We'll go through those changes one by one in the following sections, which will turn the baseline UNet into a complete NoiseNet implementation.

Before we write any of the actual model code, let's quickly implement a few helper functions.

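```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim
from einops.layers.torch import Rearrange
from tqdm import tqdm


def extract(t):
    # Unsqueeze a Tensor of shape () or (B,) to 4 dimensions, e.g. (B, 1, 1, 1),
    # so that it broadcasts against a batch of images of shape (B, C, H, W)
    for _ in range(4 - len(t.shape)):
        t = torch.unsqueeze(t, -1)
    return t


def scale_0_1(image):
    # Min-max scale a (B, C, H, W) Tensor to [0, 1], separately per image and channel
    image = image - torch.amin(image, dim=(2, 3), keepdim=True)
    return image / torch.amax(image, dim=(2, 3), keepdim=True)


def scale_minus1_1(image):
    # Map a Tensor with values in [0, 1] to [-1, 1]
    return image * 2 - 1
```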

Attention

We need to implement an Attention layer (which is also from the attention paper) for our NoiseNet. This block helps the model focus on relevant parts of the input image while predicting the noise. The authors used this module on the lower-resolution feature maps (16×16 in the paper) to improve the model's performance in conjunction with the time inputs $t$.

I will cover attention in more detail in a later post, so for now I'll simply include the code implementing this module. If you'd like to learn more about attention, I found this tutorial very helpful.

```python
class Attention(nn.Module):
    def __init__(self, dim, heads=4, dim_head=32):
        super().__init__()
        self.scale = dim_head ** -0.5
        self.heads = heads
        self.dim_head = dim_head
        self.hidden_dim = dim_head * heads
        self.norm = nn.GroupNorm(1, dim)
        # A single 1x1 convolution produces queries, keys and values for all heads
        self.to_qkv = nn.Conv2d(dim, self.hidden_dim * 3, 1, bias=False)
        self.to_out = nn.Conv2d(self.hidden_dim, dim, 1)

    def forward(self, x):
        b, c, h, w = x.shape
        x = self.norm(x)
        qkv = self.to_qkv(x).chunk(3, dim=1)
        # (b, heads * dim_head, h, w) -> (b, heads, dim_head, h * w)
        q, k, v = map(lambda t: t.view(b, self.heads, self.dim_head, h * w), qkv)
        q = q * self.scale
        # Similarity between every pair of pixel positions i and j
        sim = torch.einsum("b h c i, b h c j -> b h i j", q, k)
        # Subtract the row max for numerical stability (the softmax is unchanged)
        sim = sim - sim.amax(dim=-1, keepdim=True).detach()
        attention = sim.softmax(dim=-1)
        out = torch.einsum("b h i j, b h c j -> b h i c", attention, v)
        out = out.permute(0, 1, 3, 2).reshape((b, self.hidden_dim, h, w))
        return self.to_out(out)
```
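The line that subtracts sim.amax(...) before the softmax is a standard numerical-stability trick. Shifting all scores by the same amount leaves the softmax unchanged, but it keeps the exponentials from overflowing. The pure-Python sketch below shows the difference, along with one query attending over a set of keys and values with a scale factor like dim_head ** -0.5. It uses hypothetical helpers, a single head, and plain lists instead of tensors.

```python
import math


def softmax_naive(scores):
    exps = [math.exp(s) for s in scores]  # math.exp overflows above ~709
    total = sum(exps)
    return [e / total for e in exps]


def softmax_stable(scores):
    # Subtracting the max leaves the result unchanged mathematically,
    # but keeps every exponent <= 0 so math.exp cannot overflow
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_row(query, keys, values, scale):
    """One query attending over lists of key/value vectors (single head)."""
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax_stable(scores)
    dim = len(values[0])
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(dim)]


print(softmax_stable([1000.0, 999.0, 998.0]))  # softmax_naive would raise OverflowError
```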

Upblock, Midblock, Downblock

The backbone of the network will be composed of blocks, which we'll call Upblock, Midblock, and Downblock.

These blocks encapsulate the logic for the encoder and decoder parts of the UNetrespectively.

Since they'll implement residual connections, they'll also require specialized .forward methods.

I've included the code for each in its entirely below - we'll go into the inner details in subsequent sections.

One important thing to note is that these blocks return multiple outputs, so that we can implement skip connections in the main NoiseNet.

In particular, Upblock has a specialized residual input that we use like a stack for our residual connections.

Downblock returns these residuals, so we'll have to use some special logic in the overall NoiseNet to tie these blocks together.

```python
class UpBlock(nn.Module):
    def __init__(self, dim_in, dim_out, dim_time, attn=False, upsample=True):
        super().__init__()
        # Each block sees its input concatenated with a dim_in-channel skip feature
        self.block1 = ResnetBlock(dim_out + dim_in, dim_out, dim_time)
        self.block2 = ResnetBlock(dim_out + dim_in, dim_out, dim_time)
        self.attn = Attention(dim_out) if attn else nn.Identity()
        self.us = Upsample(dim_out, dim_in) if upsample else nn.Conv2d(dim_out, dim_in, 3, padding=1)

    def forward(self, x, t, r):
        # r is the shared stack of skip features; pop the most recent one each time
        x = torch.cat((x, r.pop()), dim=1)
        x = self.block1(x, t)
        x = torch.cat((x, r.pop()), dim=1)
        x = self.block2(x, t)
        x = self.attn(x)
        x = self.us(x)
        return x


class MidBlock(nn.Module):
    def __init__(self, dim, dim_time):
        super().__init__()
        self.conv1 = ResnetBlock(dim, dim, dim_time)
        self.attn = Attention(dim)
        self.conv2 = ResnetBlock(dim, dim, dim_time)

    def forward(self, x, t):
        x = self.conv1(x, t)
        x = self.attn(x)
        x = self.conv2(x, t)
        return x


class DownBlock(nn.Module):
    def __init__(self, dim_in, dim_out, dim_time, attn=False, downsample=True):
        super().__init__()
        self.block1 = ResnetBlock(dim_in, dim_in, dim_time)
        self.block2 = ResnetBlock(dim_in, dim_in, dim_time)
        self.attn = Attention(dim_in) if attn else nn.Identity()
        self.ds = Downsample(dim_in, dim_out) if downsample else nn.Conv2d(dim_in, dim_out, 3, padding=1)

    def forward(self, x, t):
        residuals = []
        x = self.block1(x, t)
        residuals.append(x.clone())  # first skip feature
        x = self.block2(x, t)
        x = self.attn(x)
        residuals.append(x.clone())  # second skip feature (popped first by the matching UpBlock)
        x = self.ds(x)
        return x, residuals
```
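The order in which skip features are pushed and popped is easy to get wrong. Here is a small pure-Python trace of the stack logic these blocks rely on, for five levels. The helper is hypothetical and strings stand in for tensors. It assumes, as the NoiseNet does, that the first entry on the stack is the output of the input convolution. Each UpBlock should pop the two features saved by the DownBlock at the same level, most recent first.

```python
def simulate_skip_stack(num_levels):
    """Trace which saved features each UpBlock pops, using labels instead of tensors."""
    stack = ["input_conv"]                           # first features pushed by the network
    for level in range(num_levels):                  # DownBlocks, shallow to deep
        stack += [f"down{level}.block1_out", f"down{level}.attn_out"]
    pops = []
    for level in reversed(range(num_levels)):        # UpBlocks, deep to shallow
        first = stack.pop()                          # concatenated before block1
        second = stack.pop()                         # concatenated before block2
        pops.append((f"up{level}", first, second))
    pops.append(("output_res", stack.pop()))         # final concat before the output block
    return pops, stack


pops, leftover = simulate_skip_stack(5)
for entry in pops:
    print(entry)
```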

ResNetBlock

The core block in the Upblock, Midblock, and Downblock is a ResNetBlock. This module takes in a time input as well as the image feature input and transforms them into the next stage of features. An important note here: implementing the block this way lets the DDPM include the time input at every stage of the noise prediction network.

```python
class ResnetBlock(nn.Module):
    def __init__(self, in_channels, out_channels, time_embedding_dim):
        super().__init__()
        # Maps the time embedding to one value per input channel
        self.time_fc = nn.Linear(time_embedding_dim, in_channels)
        self.conv1 = ConvBlock(in_channels, out_channels)
        self.conv2 = ConvBlock(out_channels, out_channels)
        # 1x1 convolution on the residual path when the channel count changes
        self.conv_res = (
            nn.Conv2d(in_channels, out_channels, 1)
            if in_channels != out_channels else nn.Identity()
        )
```

Each ResNet block also uses a residual connection internally: the input is added back to the output, through a 1×1 convolution when the channel counts differ. This requires a specialized .forward method.

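```python
    def forward(self, x, t):
        # Project the time embedding to in_channels and broadcast it over H and W
        t_emb = self.time_fc(F.silu(t))[:, :, None, None]
        h = self.conv1(x + t_emb)
        h = self.conv2(h)
        # Residual path
        r = self.conv_res(x)
        return h + r
```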

ConvBlock

All of the blocks above are built from one component - the ConvBlock. This block simply packages a Conv2d operation with group normalization and an activation function.

```python
class ConvBlock(nn.Module):
    def __init__(self, in_channels, out_channels, groups=4):
        super().__init__()
        self.norm = nn.GroupNorm(groups, out_channels)
        self.conv = nn.Conv2d(in_channels, out_channels, 3, padding=1)

    def forward(self, x):
        # 3x3 convolution -> group normalization -> SiLU activation
        x = self.conv(x)
        x = self.norm(x)
        return F.silu(x)
```

Upsample and Downsample

There’s not much theory behind these modules so I’ll keep it brief.

The Upsample block is implemented as a standard (nearest-neighbor) upsampling, followed by a convolutional layer for feature extraction.

```python
class Upsample(nn.Module):
    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.model = nn.Sequential(
            nn.Upsample(scale_factor=2, mode="nearest"),  # double H and W
            nn.Conv2d(in_channels, out_channels, 3, padding=1),
        )

    def forward(self, x):
        return self.model(x)
```

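The Downsample block is a bit more complicated. Essentially, we're moving features from the spatial dimensions into the channel dimension as a form of downsampling. Each 2×2 patch of pixels is folded into the channels, which halves the height and width and quadruples the number of channels. Again, there's an added convolutional layer (here a 1×1 convolution) for feature extraction.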

```python
class Downsample(nn.Module):
    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.model = nn.Sequential(
            # Fold each 2x2 spatial patch into the channels: (C, H, W) -> (4C, H/2, W/2)
            Rearrange("b c (h p1) (w p2) -> b (c p1 p2) h w", p1=2, p2=2),
            nn.Conv2d(in_channels * 4, out_channels, 1),
        )

    def forward(self, x):
        return self.model(x)
```
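To make the Rearrange pattern concrete, here is a pure-Python version of the same space-to-depth step for a single image stored as nested lists. It is a hypothetical helper for illustration only; the real module uses einops on batched tensors.

```python
def space_to_depth(image):
    """Rearrange a (C, H, W) nested list into (C * 4, H // 2, W // 2).

    Mirrors "b c (h p1) (w p2) -> b (c p1 p2) h w" with p1 = p2 = 2 for one
    batch element: output channel index = c * 4 + p1 * 2 + p2.
    """
    channels, height, width = len(image), len(image[0]), len(image[0][0])
    out = []
    for c in range(channels):
        for p1 in range(2):
            for p2 in range(2):
                out.append([[image[c][2 * h + p1][2 * w + p2]
                             for w in range(width // 2)]
                            for h in range(height // 2)])
    return out


image = [[[4 * r + col for col in range(4)] for r in range(4)]]  # 1 x 4 x 4, values 0..15
for channel in space_to_depth(image):
    print(channel)
```

No values are lost. Every pixel ends up in exactly one output channel, and the 1×1 convolution afterwards learns how to mix them.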

Time Embedding

Finally, we need to implement an embedding layer for the input timestep $t$. This will convert $t$ from a single scalar into a vector that the model can make better use of at each of the stages covered above. We'll use another concept from the attention paper known as sinusoidal position embedding. I won't go into the theory in detail here (I'll cover it in a later post on the Transformer), so for our purposes the implementation is pretty simple.

```python
class SinusoidalPosEmb(nn.Module):
    def __init__(self, dim, device=None, theta=10000):
        super().__init__()
        self.dim = dim
        self.device = device  # kept for compatibility; forward() uses the device of `time`
        self.theta = theta

    def forward(self, time):
        # time: (B,) Tensor of timesteps -> (B, dim) embedding
        half_dim = self.dim // 2
        emb = math.log(self.theta) / (half_dim - 1)
        # Frequencies decay geometrically from 1 down to 1 / theta
        emb = torch.exp(torch.arange(half_dim, device=time.device) * -emb)
        emb = time[:, None] * emb[None, :]
        return torch.cat((emb.sin(), emb.cos()), dim=-1)
```
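For reference, here is the same computation in pure Python for a single timestep. It is a hypothetical helper, with theta = 10000 as in the module above. At $t = 0$ every sine is 0 and every cosine is 1, and the frequencies fall geometrically from 1 to $1/\theta$.

```python
import math


def sinusoidal_embedding(t, dim, theta=10000.0):
    """Pure-Python version of the sinusoidal embedding for one timestep t."""
    half_dim = dim // 2
    scale = math.log(theta) / (half_dim - 1)
    freqs = [math.exp(-scale * i) for i in range(half_dim)]  # 1, ..., 1 / theta
    args = [t * f for f in freqs]
    return [math.sin(a) for a in args] + [math.cos(a) for a in args]


print(sinusoidal_embedding(0, 8))    # four zeros followed by four ones
print(sinusoidal_embedding(250, 8))
```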

NoiseNet

With all the above components in place, we can construct the full noise prediction network. In the code below, we create the main blocks of the NoiseNet in a UNet-like structure.

```python
class NoiseNet(nn.Module):
    def __init__(self, args, init_dim=64, dim_mults=(1, 2, 4, 8, 16), attn_resolutions=(16,)):
        super().__init__()
        self.args = args
        self.attn_resolutions = attn_resolutions
        # Width of the time embedding (the embedding network itself is shown below)
        dim_time = init_dim * 4
        self.input_conv = nn.Conv2d(args.channel_size, init_dim, 7, padding=3)

        num_resolutions = len(dim_mults)
        dims = [init_dim] + [init_dim * mult for mult in dim_mults]
        # Spatial size of the feature maps entering each level (args.dim = image size)
        resolutions = [args.dim // (2 ** i) for i in range(num_resolutions)]
        in_out_res = list(enumerate(zip(dims[:-1], dims[1:], resolutions)))

        # Encoder: every level except the last halves the spatial size
        self.downs = nn.ModuleList([])
        for i, (dim_in, dim_out, res) in in_out_res:
            downsample = i < (num_resolutions - 1)
            attn = res in attn_resolutions
            self.downs.append(DownBlock(dim_in, dim_out, dim_time, attn, downsample))

        dim_mid = dims[-1]
        self.mid = MidBlock(dim_mid, dim_time)

        # Decoder: mirrors the encoder; every level except i == 0 doubles the spatial size
        self.ups = nn.ModuleList([])
        for i, (dim_in, dim_out, res) in reversed(in_out_res):
            upsample = i > 0
            attn = res in attn_resolutions
            self.ups.append(UpBlock(dim_in, dim_out, dim_time, attn, upsample))

        self.output_res = ResnetBlock(init_dim * 2, init_dim, dim_time)
        self.output_conv = nn.Conv2d(init_dim, args.channel_size, 1)
```

We’ll also create a small network to embed the time input as a vector.

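```python
        # (inside NoiseNet.__init__) small network that embeds the timestep as a vector
        dim_time = init_dim * 4
        self.time_embedding = nn.Sequential(
            # assumes args carries the training device
            SinusoidalPosEmb(dim=init_dim, device=args.device),
            nn.Linear(init_dim, dim_time),
            nn.GELU(),
            nn.Linear(dim_time, dim_time),
        )
```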

Since we're implementing skip connections in the NoiseNet, we'll also need some specialized code in the module's forward() method. Here, a skip connection works like this: push a copy of the features onto a stack at the start of the connection, then pop it off and concatenate it with the features at the end of the connection in forward().

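```python
    def forward(self, x, t):
        t = self.time_embedding(t)          # (B,) timesteps -> (B, dim_time)
        x = self.input_conv(x)
        res_stack = [x.clone()]             # stack of skip-connection features
        for down in self.downs:
            x, residuals = down(x, t)
            res_stack += residuals          # push this level's two features
        x = self.mid(x, t)
        for up in self.ups:
            x = up(x, t, res_stack)         # each UpBlock pops two features
        x = torch.cat((x, res_stack.pop()), dim=1)  # last skip: the input_conv features
        x = self.output_res(x, t)
        x = self.output_conv(x)
        return x
```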
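To see how the constructor lays out the network, here is a pure-Python sketch that mirrors its loop over levels. It assumes square inputs whose side length halves at each level, the default init_dim and dim_mults, and attention at resolution 16. The 64×64 image size is a hypothetical choice, since the post does not state the training resolution.

```python
def describe_levels(image_size, init_dim=64, dim_mults=(1, 2, 4, 8, 16), attn_resolutions=(16,)):
    """Return (level, dim_in, dim_out, resolution, attn, downsample) for each DownBlock."""
    num_resolutions = len(dim_mults)
    dims = [init_dim] + [init_dim * m for m in dim_mults]
    resolutions = [image_size // (2 ** i) for i in range(num_resolutions)]
    levels = []
    for i, (dim_in, dim_out, res) in enumerate(zip(dims[:-1], dims[1:], resolutions)):
        levels.append((i, dim_in, dim_out, res, res in attn_resolutions, i < num_resolutions - 1))
    return levels


for level in describe_levels(64):  # hypothetical 64x64 input
    print(level)
```

For a 64×64 input, only the third level (16×16 feature maps) gets attention, and the last level keeps its 4×4 resolution instead of downsampling further.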

Sampling and Noise Addition

To make things a bit easier, we're going to wrap all these components up into one module - a DenoisingDiffusionModel. In addition to the NoiseNet, this module will also store the buffers for $\beta_t$, $\alpha_t$, $\bar\alpha_t$, and all the other variables we need to implement the noise addition and sampling procedures.

```python
class DenoisingDiffusionModel(nn.Module):
    def __init__(self, args, device, t=1000, b_0=1e-4, b_t=0.02):
        # t: number of diffusion steps T
        # b_0, b_t: first and last value of the linear beta schedule
        # (these defaults are the values used in the DDPM paper)
        super().__init__()
        self.device = device
        self.noise_net = NoiseNet(args, dim_mults=(1, 2, 4, 8, 16))

        T = torch.tensor(t).to(torch.float32)
        beta = torch.linspace(b_0, b_t, t, dtype=torch.float32, device=device)
        alpha = 1.0 - beta
        alpha_bar = torch.cumprod(alpha, dim=0)
        one_minus_alpha_bar = 1.0 - alpha_bar
        sqrt_alpha = torch.sqrt(alpha)
        sqrt_alpha_bar = torch.sqrt(alpha_bar)
        sqrt_one_minus_alpha_bar = torch.sqrt(one_minus_alpha_bar)

        # Buffers follow .to(device) and are saved in the state_dict, but are not trained
        self.register_buffer("T", T)
        self.register_buffer("beta", beta)
        self.register_buffer("alpha", alpha)
        self.register_buffer("alpha_bar", alpha_bar)
        self.register_buffer("one_minus_alpha_bar", one_minus_alpha_bar)
        self.register_buffer("sqrt_alpha", sqrt_alpha)
        self.register_buffer("sqrt_alpha_bar", sqrt_alpha_bar)
        self.register_buffer("sqrt_one_minus_alpha_bar", sqrt_one_minus_alpha_bar)
```

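We'll also implement the noising and sampling procedures that we derived above.

The noising procedure goes like this:

$$\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}, \quad \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$$

which is implemented as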

```python
    def _noise_t(self, x0, t):
        # Sample x_t ~ q(x_t | x_0) in closed form for a batch of timesteps t
        sqrt_alpha_bar = extract(self.sqrt_alpha_bar[t])
        sqrt_one_minus_alpha_bar = extract(self.sqrt_one_minus_alpha_bar[t])
        noise = torch.randn_like(x0)
        xt = sqrt_alpha_bar * x0 + sqrt_one_minus_alpha_bar * noise
        return xt, noise
```

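The sampling procedure is a bit more complicated. Let's first implement the sampling step for a given timestep $t$, i.e. one draw from $p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)$. We'll also add a small helper that picks which timesteps to save for visualization.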

```python
    def _get_sample_ts(self):
        # Timesteps at which to save intermediate images: 0, then roughly every T / 10 steps up to T - 1
        T = self.T.int().item()
        ts = torch.linspace(0, T, steps=11)
        ts -= torch.ones_like(ts)
        ts[0] = 0.0
        return torch.round(ts)

    @torch.inference_mode()
    def _sample_t(self, xt, t):
        # One reverse step x_t -> x_{t-1}; timesteps have shape (B,) like in training
        ts = torch.full((xt.shape[0],), t, dtype=torch.float32, device=self.device)
        beta = extract(self.beta[t])
        sqrt_alpha = extract(self.sqrt_alpha[t])
        sqrt_one_minus_alpha_bar = extract(self.sqrt_one_minus_alpha_bar[t])
        noise_pred = self.noise_net(xt, ts)
        # Mean of p(x_{t-1} | x_t) in the epsilon parameterization
        xt_prev = (xt - noise_pred * beta / sqrt_one_minus_alpha_bar) / sqrt_alpha
        if t > 0:
            # sigma_t = sqrt(beta_t); no noise is added at the final step
            sigma = beta ** 0.5
            xt_prev += sigma * torch.randn_like(xt_prev)
        return xt_prev
```

The full sampling procedure then just involves running the _sample_t method across the timesteps in reverse order, starting from pure noise $\mathbf{x}_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$.

```python
    @torch.inference_mode()
    def sample(self, shape):
        images_list = []
        xt = torch.randn(shape, device=self.device)  # x_T ~ N(0, I)
        T = self.T.int().item()
        sample_ts = self._get_sample_ts()
        for t in tqdm(reversed(range(0, T)), position=0):
            xt = self._sample_t(xt, t)
            if t in sample_ts:
                # Keep snapshots of intermediate results for visualization
                images_list.append(scale_0_1(xt).cpu())
        return images_list
```

We just need one final forward method to complete the code.

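```python
    def forward(self, x):
        # Training step: pick a random timestep per image, noise the batch,
        # and return the predicted noise alongside the true noise
        T = self.T.int().item()
        ts = torch.randint(T, (x.shape[0],), device=self.device)
        xt, noise = self._noise_t(x, ts)
        noise_hat = self.noise_net(xt, ts)
        return noise_hat, noise
```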

And with that, we’re done with the code!
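As a final check on the buffers stored in DenoisingDiffusionModel, here is a pure-Python sketch of the same schedule arithmetic. The assumed values are $T = 1000$ and a linear schedule from $10^{-4}$ to $0.02$, the DDPM paper's settings, and make_schedule is a hypothetical helper. By the last step $\bar\alpha_T$ is tiny, so $\mathbf{x}_T$ is almost pure noise. That is what lets sampling start from $\mathcal{N}(\mathbf{0}, \mathbf{I})$.

```python
import math


def make_schedule(T=1000, beta_1=1e-4, beta_T=0.02):
    """Pure-Python counterparts of the registered buffers (0-indexed like the code)."""
    betas = [beta_1 + (beta_T - beta_1) * i / (T - 1) for i in range(T)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a  # cumulative product, like torch.cumprod
        alpha_bars.append(running)
    return {
        "beta": betas,
        "alpha": alphas,
        "alpha_bar": alpha_bars,
        "sqrt_alpha_bar": [math.sqrt(a) for a in alpha_bars],
        "sqrt_one_minus_alpha_bar": [math.sqrt(1.0 - a) for a in alpha_bars],
    }


s = make_schedule()
# Weight on x_0 at the first and last step: close to 1, then close to 0
print(s["sqrt_alpha_bar"][0], s["sqrt_alpha_bar"][-1])
```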

Generating Art

Now that we have the base network complete, let's put together a quick training loop and dataset to generate some cool new images!

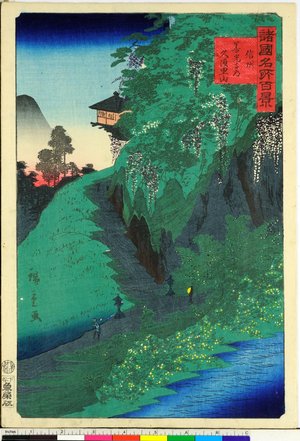

Many posts about DDPMs online use the MNIST dataset or the CelebA faces dataset. For this series, I'll be using more challenging datasets to better represent the difficulties of training these algorithms in the wild. As in my earlier post, all the experiments below use images from the Japanese Woodblock Print database.

Let’s try and generate images like the one above!

Again, I'll skip the steps of defining the dataset and dataloader, since they're usually pretty simple, and instead focus on the training loop. If you'd like to see that code, you can check out the full repo here.

Since we've already defined our other functions, the main training loop is also pretty simple. We just need to minimize the MSE between the actual noise and the noise predicted by our NoiseNet over the samples in the training dataset. We'll randomly sample timesteps throughout training. With enough batches, we should reach good coverage of all $t$ from $1$ through $T$ (indices 0 through T - 1 in the code).

```python
# args: the same configuration object passed to NoiseNet (channel_size, dim, ...)
ddpm = DenoisingDiffusionModel(args, device)
ddpm.to(device)
optimizer = optim.Adam(ddpm.parameters(), lr=lr)
losses = []

for epoch in range(n):
    progress_bar = tqdm(total=len(self.dataloader))
    progress_bar.set_description(f"Epoch {epoch}")
    for i, (batch, _) in enumerate(self.dataloader):
        batch = scale_minus1_1(batch.to(device))  # images in [0, 1] -> [-1, 1]

        optimizer.zero_grad()
        batch_noise_hat, batch_noise = ddpm(batch)        # predicted and true noise
        loss = F.mse_loss(batch_noise_hat, batch_noise)   # L_simple
        loss.backward()
        optimizer.step()
        losses.append(loss.item())

        #############################
        ####  Metrics Tracking   ####
        #############################
        if i % 100 == 0:
            print(f"[{epoch}/{n}][{i}/{len(self.dataloader)}]\tloss: {loss.item():.4f}")
        progress_bar.update(1)
    progress_bar.close()

    if epoch == 0:
        plot_batch(scale_0_1(batch), self.progress_dir + "train_example")
    fake = ddpm.sample(batch.shape)[-1]
    plot_batch(scale_0_1(fake), self.progress_dir + f"epoch:{epoch:04d}")
```

For training, I ran the procedure for n = 10 epochs using a constant learning rate of 2e-5 and T = 1000. Diffusion models often take a long time to train, both because of the amount of data required and because of the time taken to run the sampling procedure for intermediate results, so be prepared to be patient.

Once we’ve completed training we can take a look at our generated images. As per usual, I've included images from the dataset that I feel look similar.


The images generated here are pretty decent! The dataset is unclean and relatively small by diffusion-model standards (roughly 200K images), yet our model is able to generate images with some surprising details. You can even see some attempts at calligraphy in the generated images above, which shows the model can learn fine details from the dataset. We can see clear similarities between some of the dataset images and the generated art above, but the diffusion model still has some way to go before it matches real artwork.

Let's also take a look at how the diffusion model improves on the same batch of latent inputs (fixed_latent) from above throughout training.

For completeness, let's also visualize the progressive denoising of a generated image. The code for this just involves storing the generated images every 100 timesteps for display.

Tips and Tricks

Training a diffusion model isn't as difficult as some of the other algorithms we've looked at, but there are still some small, easy-to-implement tricks you can add that can significantly improve the overall results.

- Modify variance

One quick trick is to replace the variance $\sigma_t^2 = \beta_t$ in the sampling procedure with $\tilde\beta_t = \frac{1 - \bar\alpha_{t-1}}{1 - \bar\alpha_t}\beta_t$. The authors of the paper say that both values end up producing similar results. However, $\sigma_t^2 = \beta_t$ is optimal for $\mathbf{x}_0 \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, while $\sigma_t^2 = \tilde\beta_t$ is optimal when $\mathbf{x}_0$ is deterministically set to one point. In code, it's as simple as changing the noise term inside the if t > 0 branch of _sample_t to

```python
# inside _sample_t
if t > 0:
    # beta_tilde_t = beta_t * (1 - alpha_bar_{t-1}) / (1 - alpha_bar_t)
    one_minus_alpha_bar_prev = self.one_minus_alpha_bar[t - 1]
    one_minus_alpha_bar = self.one_minus_alpha_bar[t]
    posterior_variance = beta * one_minus_alpha_bar_prev / one_minus_alpha_bar
    # The noise is scaled by the standard deviation, not the variance
    xt_prev += posterior_variance ** 0.5 * torch.randn_like(xt_prev)
```

- Modify schedules

In my implementation, I used a linear schedule for $\beta_t$. In practice, some implementations use other schedules, such as sigmoid, cosine, or quadratic schedules. These schedules change how $\beta_t$ (and therefore $\bar\alpha_t$) evolves over the timesteps $t = 1, \dots, T$. It's hard to tell ahead of time how this will affect the results, but it's something to try.

Another related tip to try is a different learning rate schedule. Usually, it's easiest to keep the learning rate constant over training, but sometimes other schedules like the ones I mentioned above can have a positive effect on training. Again, it's hard to tell ahead of time how this will affect the results, but it may be worth testing out.

- Clipping in sampling procedure

Another quick thing to try out would be replacing the sampling procedure with a version that uses clipping. The motivation here is that since we know $\mathbf{x}_0$ should be between $-1$ and $1$, we can force the prediction $\hat{\mathbf{x}}_0$ to also be within this range when computing $\mathbf{x}_{t-1}$. Instead of our original sampling procedure, we would compute

$$\hat{\mathbf{x}}_0 = \mathrm{clip}\left(\frac{\mathbf{x}_t - \sqrt{1 - \bar\alpha_t}\,\boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)}{\sqrt{\bar\alpha_t}}, -1, 1\right)$$

$$\mathbf{x}_{t-1} = \frac{\sqrt{\bar\alpha_{t-1}}\,\beta_t}{1 - \bar\alpha_t}\hat{\mathbf{x}}_0 + \frac{\sqrt{\alpha_t}\,(1 - \bar\alpha_{t-1})}{1 - \bar\alpha_t}\mathbf{x}_t + \sigma_t\mathbf{z}$$

In code, this involves changing the _sample_t method to

```python
@torch.inference_mode()
def _sample_t(self, xt, t):
    ts = torch.full((xt.shape[0],), t, dtype=torch.float32, device=self.device)
    beta = extract(self.beta[t])
    sqrt_alpha = extract(self.sqrt_alpha[t])
    sqrt_alpha_bar = extract(self.sqrt_alpha_bar[t])
    one_minus_alpha_bar = extract(self.one_minus_alpha_bar[t])
    sqrt_one_minus_alpha_bar = extract(self.sqrt_one_minus_alpha_bar[t])
    # At t = 0 there is no previous step, so alpha_bar_{-1} is treated as 1
    if t > 0:
        sqrt_alpha_bar_prev = extract(self.sqrt_alpha_bar[t - 1])
        one_minus_alpha_bar_prev = extract(self.one_minus_alpha_bar[t - 1])
    else:
        sqrt_alpha_bar_prev = extract(torch.tensor(1.0, device=self.device))
        one_minus_alpha_bar_prev = extract(torch.tensor(0.0, device=self.device))

    # Coefficients of the posterior mean mu_tilde_t(x_t, x_0)
    x0_coeff = sqrt_alpha_bar_prev * beta / one_minus_alpha_bar
    xt_coeff = sqrt_alpha * one_minus_alpha_bar_prev / one_minus_alpha_bar

    # Predict x_0 from the noise estimate and clip it to the data range [-1, 1]
    noise_pred = self.noise_net(xt, ts)
    x0_pred = (xt - noise_pred * sqrt_one_minus_alpha_bar) / sqrt_alpha_bar
    x0_pred = torch.clamp(x0_pred, min=-1, max=1)

    xt_prev = x0_coeff * x0_pred + xt_coeff * xt
    if t > 0:
        sigma = beta ** 0.5
        xt_prev += sigma * torch.randn_like(xt)
    return xt_prev
```

- Clipping gradient norm

One nice thing a lot of official implementations do is clip the gradient norm before updating the model parameters. This guards against exploding gradients and can help stabilize training in the long run. In PyTorch, this is very easy to add to the training loop, between loss.backward() and optimizer.step():

```python
loss.backward()
torch.nn.utils.clip_grad_norm_(ddpm.parameters(), 1.0)
optimizer.step()
```
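For the first two tips, the schedules and posterior variances are easy to compute up front. The pure-Python sketch below shows the cosine schedule from Improved DDPM (Nichol & Dhariwal, 2021), with their offset $s = 0.008$ and $\beta_t$ clipped at 0.999. It also computes $\tilde\beta_t$ for any list of betas. The helper names are hypothetical. In practice you would convert these lists into tensors and register them as buffers, like the linear schedule in DenoisingDiffusionModel.

```python
import math


def cosine_schedule(T=1000, s=0.008, max_beta=0.999):
    """Cosine schedule: alpha_bar_t follows f(t) / f(0) with f(t) = cos^2(...)."""
    def f(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2

    # beta_t = 1 - alpha_bar_t / alpha_bar_{t-1}, clipped so that beta_T < 1
    betas = [min(1.0 - f(t) / f(t - 1), max_beta) for t in range(1, T + 1)]
    alpha_bars, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        alpha_bars.append(running)
    return betas, alpha_bars


def posterior_variances(betas):
    """beta_tilde_t = beta_t * (1 - alpha_bar_{t-1}) / (1 - alpha_bar_t) for t = 1..T."""
    out, alpha_bar_prev = [], 1.0
    for b in betas:
        alpha_bar = alpha_bar_prev * (1.0 - b)
        out.append(b * (1.0 - alpha_bar_prev) / (1.0 - alpha_bar))
        alpha_bar_prev = alpha_bar
    return out


T = 1000
linear_betas = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
cos_betas, cos_alpha_bars = cosine_schedule(T)
print(cos_alpha_bars[T // 2], posterior_variances(linear_betas)[:3])
```

Since $\bar\alpha_t$ only decreases, $\tilde\beta_t \le \beta_t$ always holds, and $\tilde\beta_1 = 0$. The "Modify variance" tip therefore adds slightly less noise at each sampling step.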

The tricks above can be helpful, but with diffusion models the best solution is often simply more and better data. As we have seen, the math for deriving diffusion models gets quite complicated, but the actual implementations are not difficult and the models are generally stable to train. This makes diffusion models well suited to simply throwing more data at them if you want better results.

With this article, we've covered the main trifecta of vision algorithms in practice today and completed the Intro to Generative A.I.: Vision Series! However, we've only really gone through the baseline algorithms in use today. We have yet to go over the many improvements and additions researchers have made to the VAE, the GAN, and the DDPM.

With that in mind, my next article will start something new - the Advanced Generative A.I.: Vision Series. This series will cover some more advanced models and get closer to the state of the art for image generation algorithms today. For the first article there, I'll cover an interesting modification to the VAE (an old friend of ours) - the vector quantized VAE, or VQ-VAE.

If you'd like to check out the full code for this series, you can visit my public repository.