Machine Learning Fundamentals: Linear Classification (1/2)

Introduction

In my previous article, I covered supervised learning. Now, we’ll dive deeper into one of the topics covered in that article - namely, linear classification. This article is the first part of two - in this part, I’ll lay out a baseline understanding of classification problem in general as well as some useful techniques.

As from before, my goal with this series is to serve as an educational tool for those looking to review the fundamentals of machine learning with an emphasis on ease of understanding. This series assumes some familiarity with machine learning in general, and as such I will try to focus on the underlying mathematics and intuition behind the concepts that we discuss.

Linear Classification

As you may recall from last article, classification refers to a set of problems where the input is from a -dimensional set , and the output is from a set of finite categorial values, which we will refer to as . A classifier will refer to a function that outputs a label for an input sample . We will train this classifier on input data and output labels .

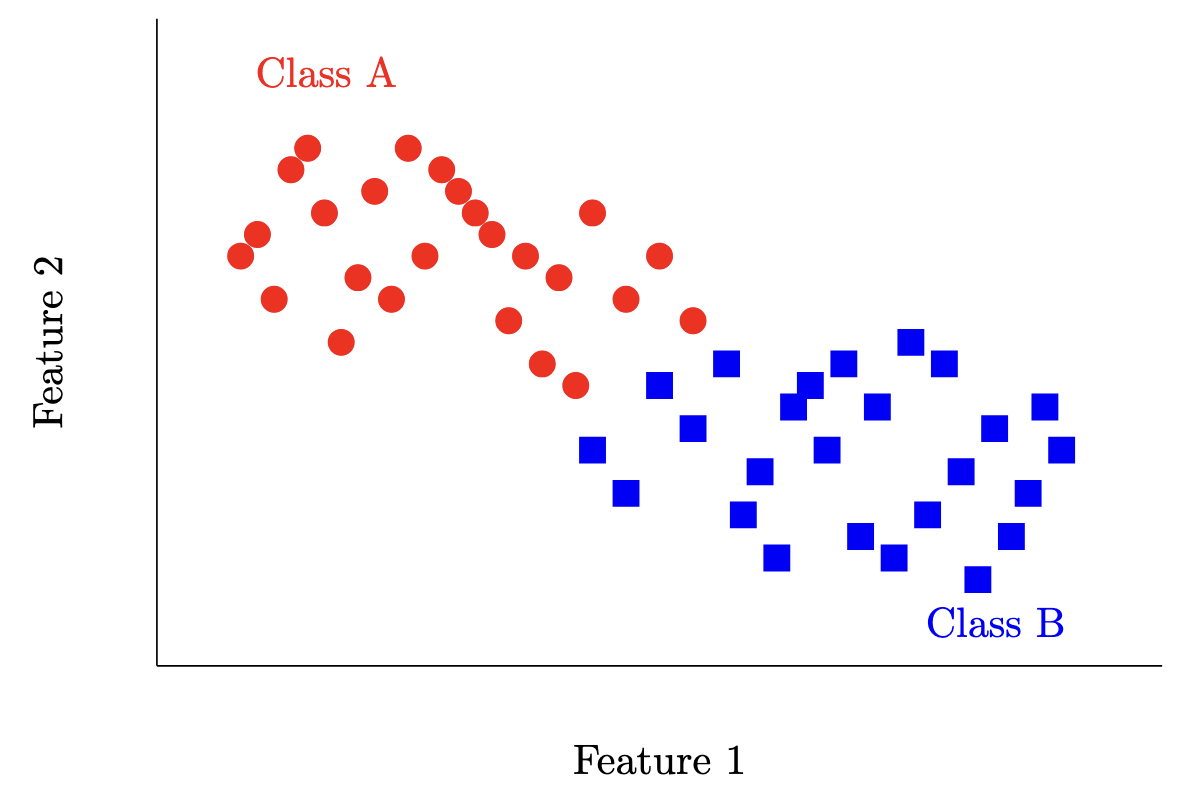

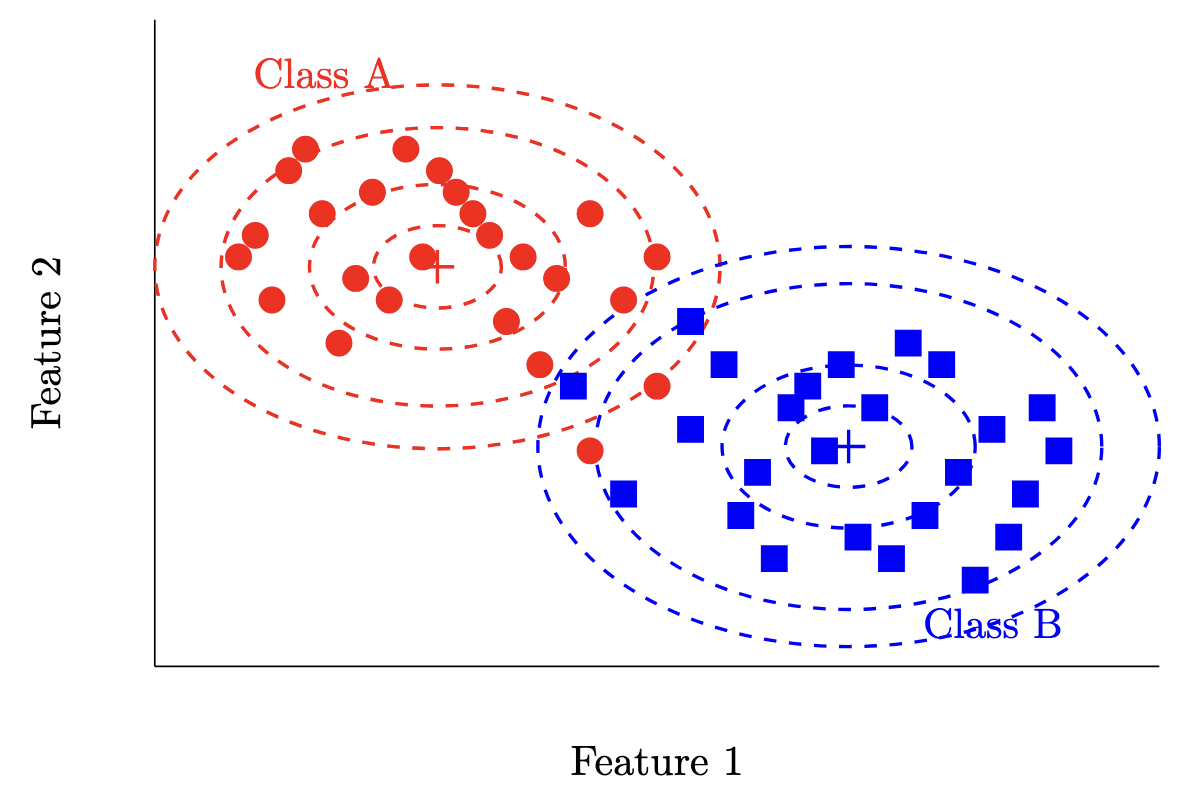

For example, for the dataset below, we’d like a function that can take in a sample point (here, ) and output a label for either Class A (red) or Class B (blue). Usually, we’ll assign numbers to classes (i.e. Class A becomes 0, and Class B becomes 1) so we can write .

There are a few types of classifiers we could naively construct off the top of our heads. For example, the constant classifier for some fixed for all inputs (i.e. in the example above classify red for everything).

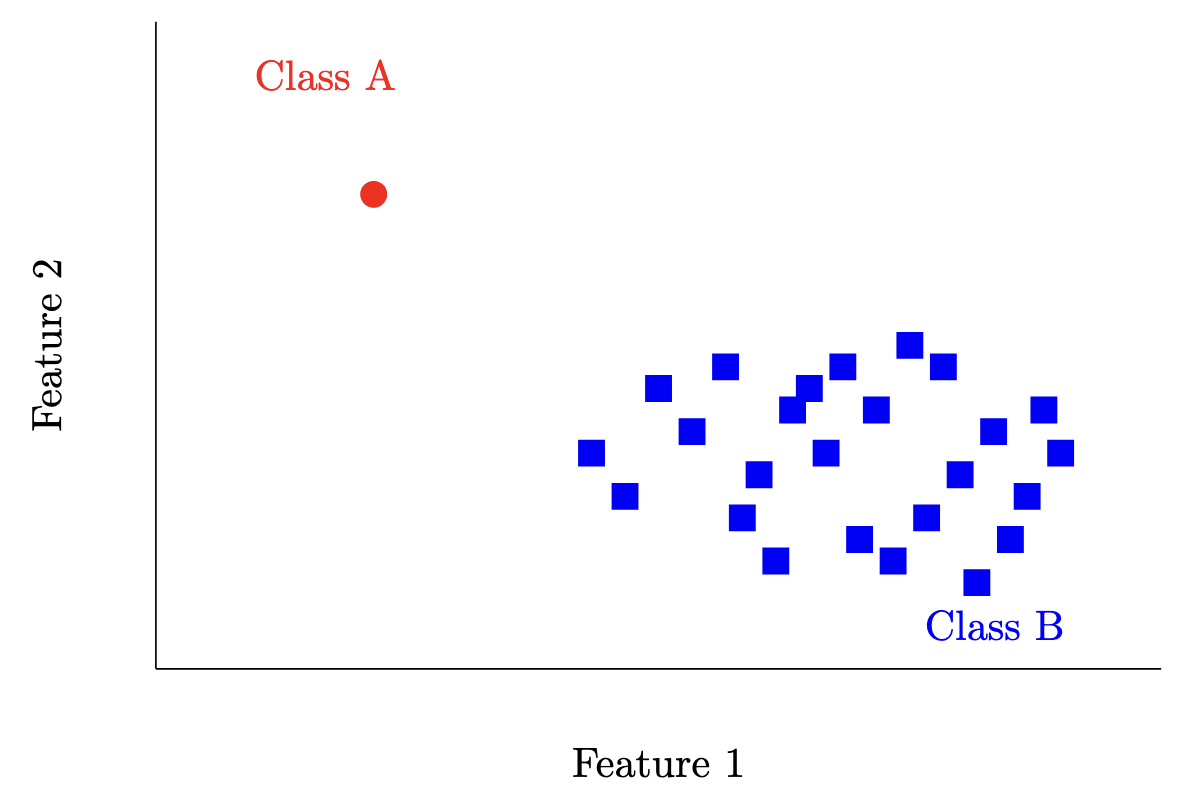

Another simple classifier would be the majority class classifier i.e. . There are definitely some datasets this could work really well for (see below), but in general we can do better.

Bayes Classifier

How much better? To answer that quantitatively, we’ll take a look at a classifier known as the Bayes classifier, which is defined rather simply:

As it turns out, this classifier is actually optimal! More formally, for an arbitrary classifier

, we can write

where

is known as the accuracy of a classifier

. Intuitively, you can just think of accuracy as the percentage of samples that a classifier labels correctly.

It’s understandable to be skeptical of this result - after all, why do we need all these fancy neural networks if the optimal classifier is this simple? Let’s first go over the proof of this result and then we can take a look at why this classifier isn’t practical.

Here, we’ll cover the proof of this result for binary i.e. the only classes are and for simplicity, although the proof does extend generally to the case of any number of categories.

Let’s consider an individual sample

. Then, for any classifier

,

Let’s let

. Then, we can write

Now, let’s use our original

and

again. We can write

Here, we can use the fact that

to simplify further.

Recall

. We now can consider three cases.

First, if , it doesn’t matter what either classifier picks since the term , so the entire expression will be .

Second, when , the Bayes classifier will pick since , which means . If the arbitrary classifier selects for , the overall expression will be , and otherwise it will be positive. Mathematically, when .

Finally, when , the Bayes classifier will pick since , which means . If the arbitrary classifier selects for , the overall expression will be , and otherwise it will still be positive, since when . Mathematically, when as well.

Therefore, this expression is always non-negative! What does this mean? Recall where we started from i.e.

. We’ve shown that this is equal to

and furthermore that this is always non-negative, meaning we can write

or in other words, the accuracy of the Bayes classifier is greater than any arbitrary classifier for a fixed point

. Our logic above didn’t depend on the particular choice of

, meaning that the same logic applies to any point

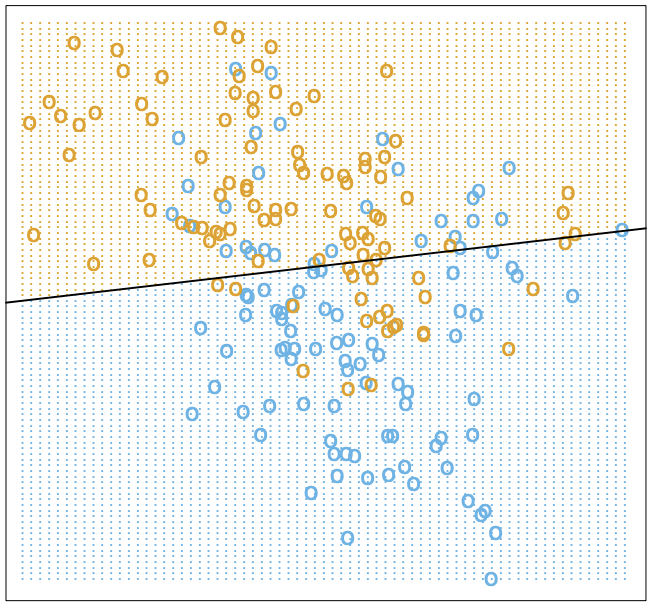

. Therefore, the Bayes classifier is optimal globally. A quick note here: optimal does not necessarily mean perfect, as shown below.

We’ve shown that the Bayes classifier cannot be beaten (as visualized above). Why then, do we need any of the items we’re about to discuss in this article? The answer lies in need for the conditional data distribution in the Bayes classifier.

Since the true data distribution is unknown, this data distribution is also unknown, meaning we need to estimate it from observed data samples. However, there can be infinite possible choices of meaning that the amount of data needed to estimate this is not practical, especially as the dimension of increases. So although the Bayes classifier is optimal, it is generally unfeasible to directly model in practice, leading us find smarter routes.

Naive Bayes Classifier

Let’s take a look at the definition of the Bayes classifier again.

One way we can simplify this is by using Bayes’ rule i.e.

Applying it here, we’ll get

In our equation, since we are taking the

over all possible values of

, and

is independent of

, we can actually remove that term entirely and write

Intuitively, this equation tells us that if we can estimate the probability that the input sample

came from the class-conditional distribution

, we can then weight this probability by the class prior (i.e.

), and choose the most likely class accordingly.

This is much more tractable than the original specification of the Bayes classifier, since we don’t need to estimate the full posterior distribution , and can instead estimate class-specific distributions and prior probabilities .

With this formulation, we have a lot of flexibility in terms of how we choose to model . If we assume that the data has some sort of form, we can choose to do some form of parametric density estimation. Essentially, we choose a type of model or distribution (i.e. linear, Gaussian, Bernoulli, etc.), and choose the parameters that are most likely to fit the given data. There are also non-parametric ways of estimating the distributions, which we may cover at another time.

One very simple way of estimating these distributions is add another assumption to our problem. If we assume that individual features are independent given the class label, we can split out the -dimensional into probabilities for each of the features. This classifier is known as the Naive Bayes Classifier.

This is a very simple model to implement in practice. However, because of the assumption of independence between features (which is generally not true), this classifier tends to result in bad estimates.

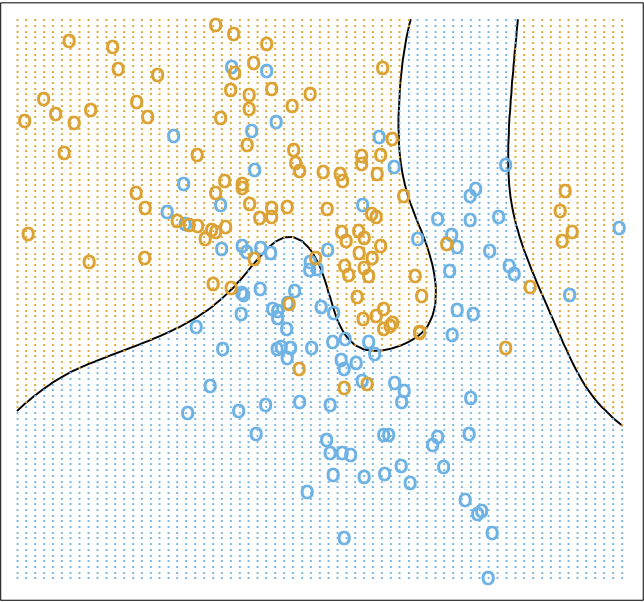

A very common choice in practice is to fit a Gaussian distribution for each class, parameterized by class specific means and variances. This is a common choice of class-conditional distribution due to the nice properties the Gaussian distribution has, especially as we increase the dimensionality of our problem. Let’s visualize what this classifier might look like for our toy example from earlier.

This classifier also allows us to measure our confidence in our prediction for each sample we classify per class, which is very useful for certain applications.

Maximum Likelihood Estimation

A reasonable question to ask at this point would be how we actually fit models like the one mentioned above to the data we have been given (as well as how any of this relates to linear classification). Rest assured, we’re slowly getting there - in this section we’ll tie all these concepts together through our discussion of maximum likelihood estimation.

Maximum likelihood estimation (MLE) is a technique used to estimate the parameters of a model to some set of data. First, let’s assume we have some data , and that each sample is independent and identically distributed (i.i.d) (this last assumption is important for later!). Then, let’s say we want to fit some class of parameterized models , where each model can be uniquely described by a set of parameters from the set of all possible parameter choices .

We define the likelihood function as follows

where the second to last step is possible because of our i.i.d. assumption from earlier. Intuitively, this function represents how probably (or likely) is the data given the model

. Maximum likelihood estimation then simply becomes which parameter setting maximizes the likelihood function, or mathematically

With this new formulation, let’s take a look at a toy example where we model our class conditional distributions with univariate Gaussians. Recall the probability density function for the Gaussian distribution

Then, our MLE problem becomes

There are two tricks we can use here to make this problem a little easier. First, since the logarithm function is strictly monotonically increasing for non-negative values, we know that

Therefore, instead of our earlier equation, we have

which is a lot nicer to analyze than the nasty product we had before.

The second trick is one you’re probably familiar with from high school calculus - namely, that finding the maximum or minimum of a function boils down to analyzing it’s stationary points, which are values when the derivative of the function is zero.

Let’s take a look at the derivative of

with respect to

and

respectively. First, let’s simplify the inner expression. We can write

Then, to find our MLE estimate of

, we have

We can discard the

term since there is no

involved. Continuing on, we have

We can then discard the constant

since we’re setting the expression to

anyways and solve

Intuitively, the best estimate of the mean parameter for the class conditional distribution is the mean of all the samples in that class in our training data - pretty simple if you think about it, but it’s nice to show it mathematically.

Now for

(phew, here we go)

We can ignore the denominator out since we want the entire expression to be

. We have

We have the MLE estimates for each class-conditional distribution now - we can just assume a Bernoulli distribution for the class priors (i.e. is just the fraction of samples with that label) and we’re done!

A small confession - the analysis I did above was for a univariate Gaussian, but in our case our data would require a multivariate Gaussian. Rest assured, the derivation ends up being essentially the same barring some complications associated with vector calculus - for the multivariate Gaussian defined as

we’d have

Implementation

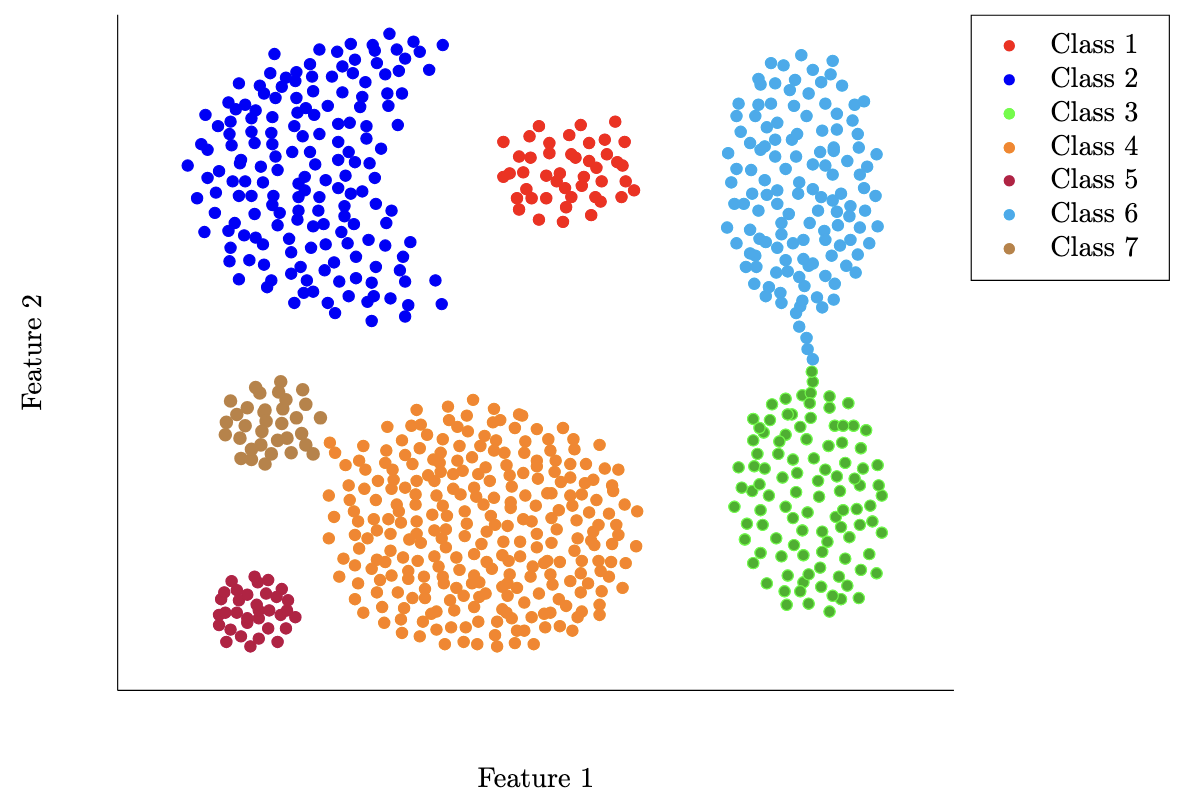

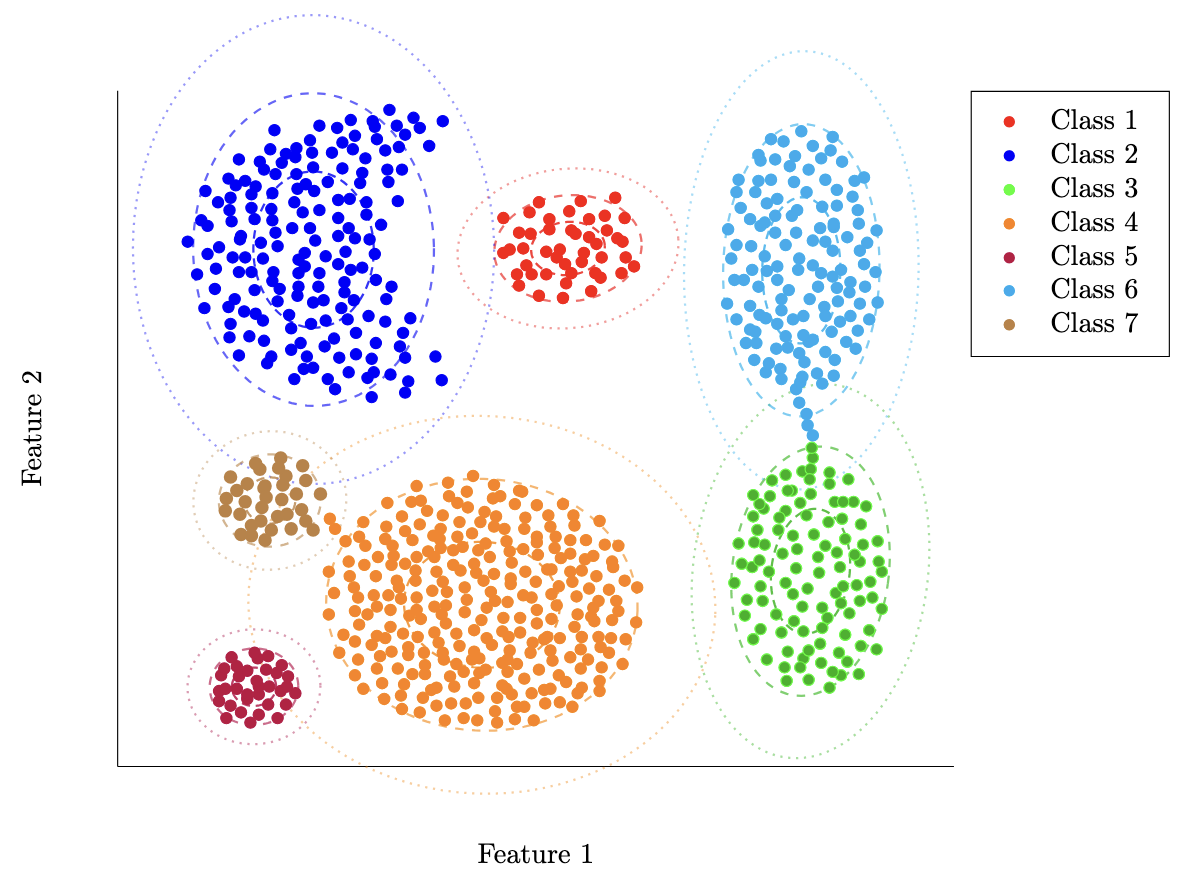

Our work has all been very theoretical up until now. For the remainder of the article, we’ll take a look at some toy examples based off this Kaggle dataset. I’ll include some small code snippets to show how you might implement these algorithms in practice. Let’s first visualize our dataset.

We can now write a function based off our derivations above to compute the class means and covariance matrices.

import pandas as pd

import numpy as np

CLASSES = np.sort(df['class'].unique())

CLASSES

def fit_gaussian_mle(df):

mle_params = {}

for c in CLASSES:

# get class data

class_data = df[df['class'] == c]

X = class_data[['feature1', 'feature2']].values

# computed for classification, but not necessary to compute class means and variance

p_y = len(class_data) / len(df)

# mle parameter estimate for class mean

mu_mle = np.mean(X, axis=0)

# mle parameter estimate for class variance

sigma_mle = np.zeros((len(X[0]), len(X[0])))

n = X.shape[0]

X_centered = X - mu_mle # (x_i - mu)

for i in range(n):

x_c_i = X_centered[i]

x_c_i = x_c_i.reshape(-1, 1) # make it a column vector

sigma_mle += x_c_i @ x_c_i.T # (x_i - mu) * (x_i - mu)^T

sigma_mle = sigma_mle / n # multiply by 1/n

mle_params[int(c)] = {

'mu': mu_mle,

'sigma': sigma_mle,

'prior': p_y

}

return mle_params

fit_gaussian_mle(df)

In my code above, I’ve refrained from using numpy functions like np.cov to be more clear with how our derivations end up being implemented in code. With the means and covariance matrices derived from this code, we can visualize our data as follows

As you can see, in addition to classification, we are also able to plot ellipses showing the relative likelihood of each class the further we get from the mean of that class. In the cases where we have overlap, we’ll rely on the feature of our original classifier to simply choose the class with the highest probability at that point.

Wrap-up

In this article, we’ve covered the basics of the classification problem, including topics such as the theory of the Bayes classifier and Maximum Likelihood Estimation. We also fit a sample dataset with MLE assuming Gaussian conditional distributions and demonstrated how you might implement this simple model in code.

If you’ve made it this far, thank for reading through as always! We’ve set up a baseline understanding of the world of classification in this article. Next time, I’ll dive more into linear classification itself, building up from the concepts we’ve covered this time.

All diagrams are made by me unless noted otherwise.