Jan. 10, 2024 | Categories: Tech

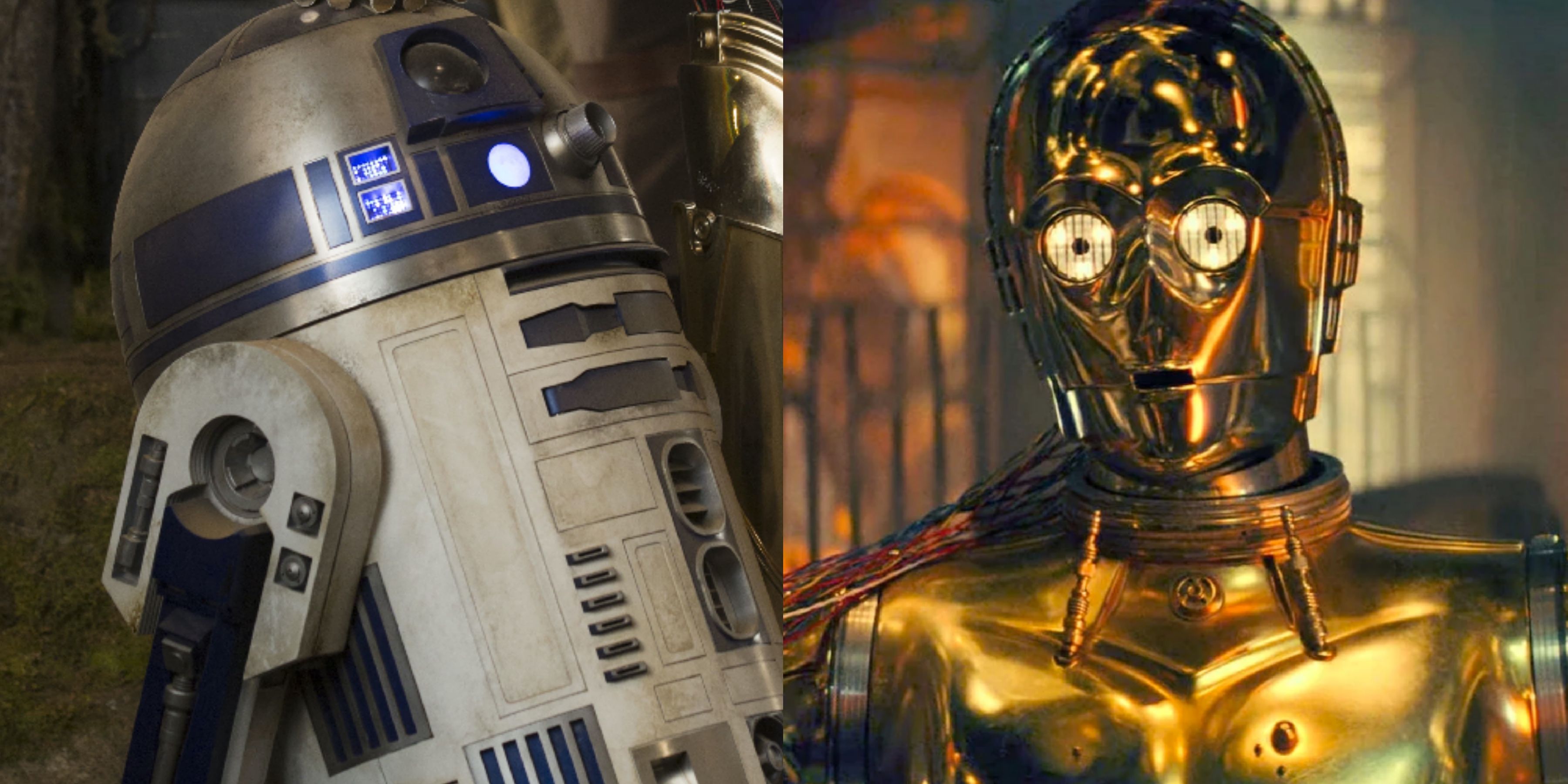

It’s time to settle the debate. Which droid is fundamentally better? Which one is truly deserving of the throne?

In the red corner, we have C-3PO, the empathetic yet ultimately useless android!

And in the blue corner, we have R2-D2, the beloved astromech droid with perhaps a little too much charm and personality!

As the Star Wars community will attest, this debate is far from over. However, I think the question of which droid is better goes a little deeper than surface-level matters such as which one is more relevant to the plot, or which one is cuter.

In fact, I think we can gain more insight than we realize into human nature when we think about R2-D2 vs C-3PO. And this insight starts with the human brain itself.

Last Friday, I meticulously picked apart and ate a full dragon roll, then later some fried chicken with soy sauce and Chick-Fil-A sauce, and then even later half a pint of blueberry cheesecake ice cream. As I was sitting there mechanically chowing down, I started wondering about the complexities of the brain and life itself, and eventually came to formulate a universal theory of quantum superposition and relativity inspired by the grandiose meal before me.

I didn’t do that, but I did wonder about why I felt like eating all that food right then and right there. What prompted my brain to signal my legs to walk the two blocks to get my food? Was it just pure chemical impulses driven by my body’s need for nutrients? Or was there something else, some other purpose to that decision?

For sure, the human brain is remarkable. It’s the most advanced biological construct we know of. But even with all our tools and research and drive, there is still so much we don’t know about the brain (like why I finished the chicken after the pint of ice cream). And surprisingly, how much we don’t know presents serious issues for the future of robotics and artificial intelligence.

Amy Harmon explores a similar question in her article “A Dying Young Woman’s Hope in Cryonics and a Future”. She follows the story of Kim Suozzi, a 23-year-old med student, as she tried to find a way to preserve her brain with cryonics before her terminal brain cancer took its toll.

When we die, our brains collapse very quickly. Preserving the brain cryonically after death requires pumping liquid into it to fill the vessels within and stop internal collapse before the freezing process. For Kim, this meant that to freeze her brain, she would have to be decapitated shortly after her death, since that would make it quicker for the technicians to get the liquid in there.

Unfortunately, during the actual process there were some difficulties, and as a result only the outer layer of her brain ended up being preserved properly. But since the outer part of the brain is associated with abstract thinking and language, her family and her boyfriend have hope that Kim’s identity can be resurrected in the future.

But this raises an interesting question: how certain are we that the parts of Kim’s brain that were saved truly represent who she was? After all, according to Harmon herself, “the fundamental question of how the brain’s physical processes give rise to thoughts, feelings and behavior, much less how to simulate them, remains a mystery.”

Linda Rodriguez McRobbie explores this concept in her article “The Strange and Mysterious History of the Ouija Board”. At a surface level, the article discusses the history of the Ouija board and how its cult appeal demonstrates interesting things about our society. But it also grapples with concepts relating to how the mind processes information.

According to her, it’s possible that the parts of our brain responsible for our non-conscious thought processes represent parts of our identity. At the very least, it’s been shown experimentally that our non-conscious brains may contain knowledge beyond what our conscious brains are able to perceive or utilize. Essentially, “the idea that the mind has multiple levels of information processing is by no means a new one, although exactly what to call those levels remains up for debate”.

Kim was well aware of this fact. Even so, she chose to undergo her cryopreservation procedure, not knowing whether the person who came back would really be her or not. The family and boyfriend she left behind, however, still harbor hope that scientists will be able to create technology that can accurately utilize the remaining parts of her brain to simulate her once again.

But if we don’t know what we’re really like, then how do we even begin to design technology that’s supposed to simulate what we’re like? Prominent A.I. writer Natalie Wolchover delves into this idea in her article “Our Instructions for A.I. Will Never be Specific Enough”. Her article addresses many concerns and questions about the current state of artificial intelligence, and additionally dives into the ethical implications of those concerns.

One major point Wolchover makes is “that humans often don’t know what goals to give AI systems, because we don’t know what we really want.” Clearly, our own brain functions are beyond our grasp, and yet the current state of research in artificial intelligence lies in designing systems capable of emulating our thought processes.

Furthermore, as McRobbie points out, it’s possible that we’re not even aware of certain components of our brain. How, then, do we start simulating those aspects of ourselves when we don’t even know what is there to simulate?

Perhaps the answer to this question requires not answering it.

Let’s take a break for a moment and consider what the best breed of dog is. Many would nominate a classic Labrador

Some others prefer a more . . . elegant archetype and so would choose something like this

And there are yet others who prefer small, energetic dogs such as these

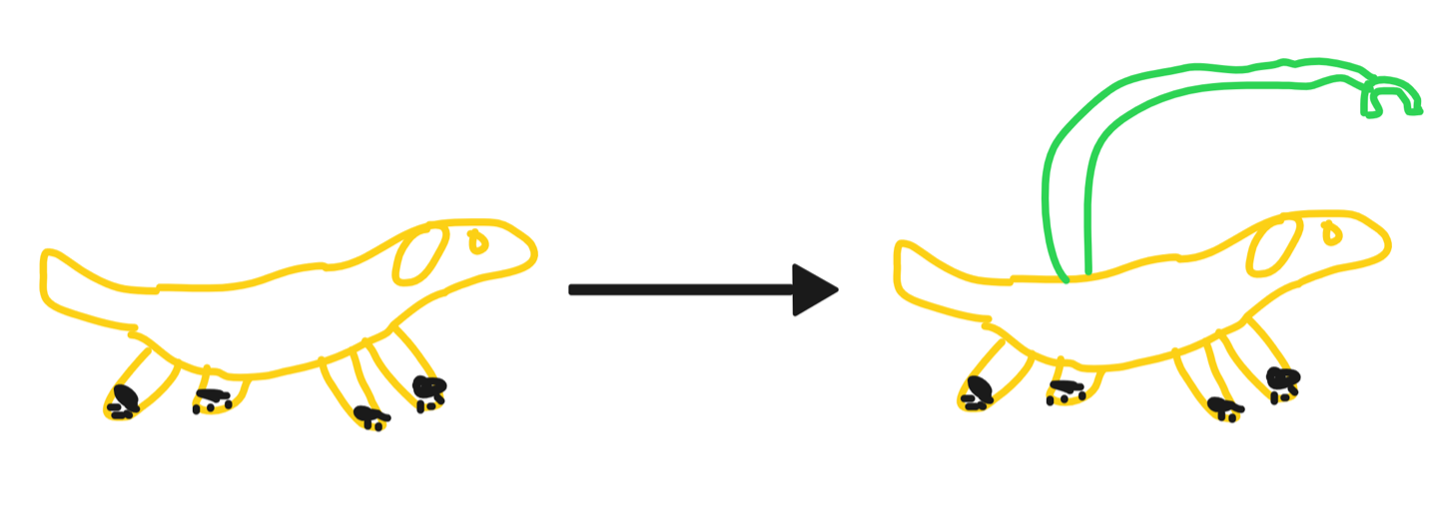

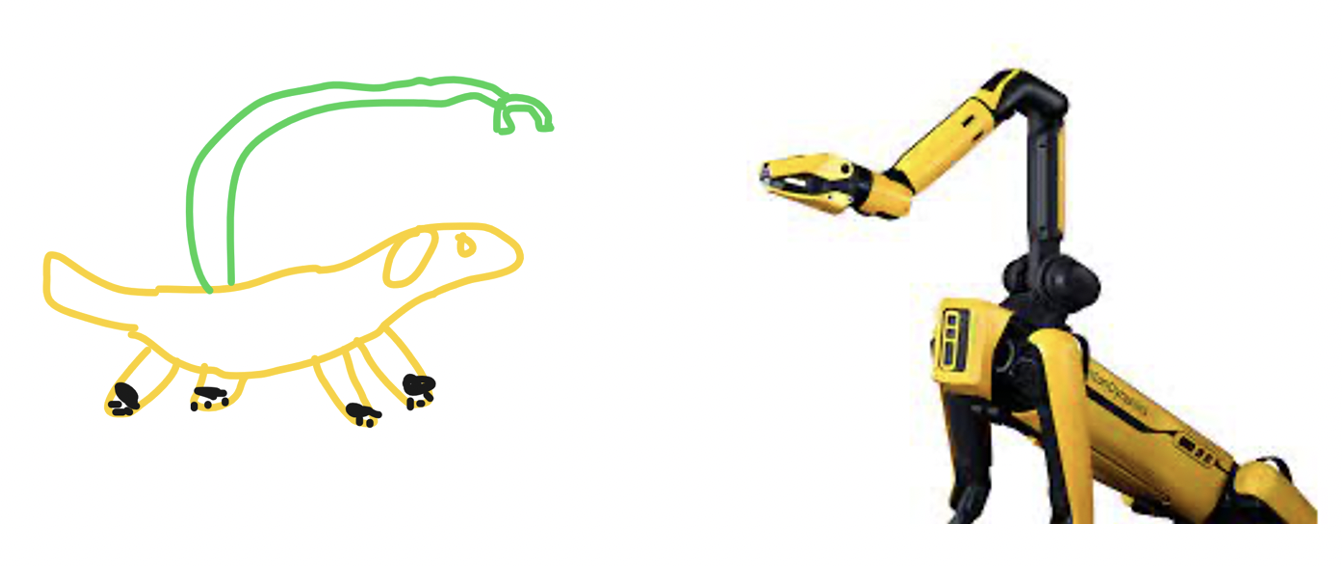

They’re all wrong. All these dogs are lacking a feature that would absolutely revolutionize the modern world – an arm with which they can walk themselves, open doors, etc. I have included a diagram below for your reference.

Jokes aside, when we look around us today, we can identify all sorts of inefficiencies and issues with what has evolved naturally. Consider the Irish elk, whose ridiculously proportioned antlers are often blamed for its extinction. Or the babirusa, a type of wild pig whose tusks can grow so long that they curve back and pierce the animal’s own skull.

Even humans are not perfect. The most prevalent example is the strain placed on mothers when going through childbirth, which even our modern medicine can’t alleviate completely.

The point is that nature isn’t perfect.

But now that humanity has entered an age of technological revolution, I think it’s pertinent to ask ourselves whether we should take our inspiration for our inventions from the imperfect models we find in nature, or if we should seek other avenues for ideation.

For example, the article “Animals Bow to their Mechanical Overlords” by Emily Anthes talks about a project by researchers seeking to create robots that could intermingle with live cockroaches. Ultimately, the researchers found that it wasn’t necessary to completely replicate the insects artificially – in fact, doing so would likely introduce new problems. As one researcher put it, “That’s good news for roboticists because it’s very difficult to copy an animal”.

Essentially, researchers aren’t trying to copy nature anymore simply due to the sheer challenge that task presents. Rather, the current state-of-the-art lies in combining aspects from nature with some artificial components.

In other words, the best inventions we have right now are like the snake-arm dog from before: hybridizations of the best components of everything we’ve seen and created so far. And to show that I’m not entirely insane, the snake-arm dog does have a robot version . . .

Clearly, the idea of hybridizing components inspired by nature in our robots isn’t revolutionary. As you can see in the Boston Dynamics video above, the state-of-the-art Spot robot was built with animals like cheetahs and dogs in mind, and additionally has an appendage inspired by more serpentine animals. The four-legged body of the robot allows it to navigate treacherous terrain more easily than a human could, and its snake-like appendage allows it to grip and control objects from difficult angles in a manner that a human couldn’t replicate.

Evidently, the approach has its merits. So why haven’t we also brought this same thinking to the problem of how we design the artificial intelligence systems that run these robots?

To provide some background, current state-of-the-art systems in artificial intelligence tend to rely on something called “deep learning”. Deep learning is a subset of the field of machine learning that mainly utilizes neural networks, which are essentially crude mathematical simulations of the human brain.

This isn’t entirely accurate in practice since neural networks learn very differently from how the human brain does. In addition, they tend to be focused on very specific tasks instead of general ones like we are used to. Think “is there something that looks like a cat in this image?” compared to “what is a cat?”.
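To make “crude mathematical simulation” a bit more concrete, here is a minimal sketch in Python of a single artificial “neuron” learning one very narrow task. Everything in it is an assumption made up for illustration: the two features (`has_whiskers`, `barks`), the four training examples, and the function names. Real deep learning systems stack millions of these units and learn from raw pixels, but the basic idea is the same.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial 'neuron': a weighted sum squashed into the range (0, 1)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def train(examples, steps=2000, rate=0.5):
    """Nudge the weights toward the labels with plain gradient descent."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(steps):
        for inputs, label in examples:
            error = neuron(inputs, weights, bias) - label
            weights = [w - rate * error * x for w, x in zip(weights, inputs)]
            bias -= rate * error
    return weights, bias

# Hypothetical toy data: (has_whiskers, barks) -> 1.0 if "cat", else 0.0.
# These features and labels are invented purely for this sketch.
EXAMPLES = [
    ([1.0, 0.0], 1.0),  # whiskers, no bark    -> cat
    ([1.0, 1.0], 0.0),  # whiskers, barks      -> not a cat
    ([0.0, 1.0], 0.0),  # no whiskers, barks   -> not a cat
    ([0.0, 0.0], 0.0),  # neither              -> not a cat
]

weights, bias = train(EXAMPLES)

def looks_like_cat(has_whiskers, barks):
    """Answers only the narrow question it was trained on."""
    return neuron([has_whiskers, barks], weights, bias) > 0.5
```

Notice what this sketch can and can’t do. After training, `looks_like_cat(1.0, 0.0)` answers the one question it was shown examples of, but nothing in those few numbers knows what a cat actually is.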

But besides that, the point here is that the baseline we currently measure our most advanced algorithms against is our own brain. We study and improve our neural network architectures and methods in the hopes that they will eventually be able to perform similarly to our own brains.

But as we’ve seen with our most advanced physical robots, approaching the problem by simply trying to imitate nature may not be the best route. And even though we’re currently the smartest species known in the universe, it’s arrogant of us to assume that the ability to simulate our own brain will be the final stage for artificial intelligence.

Shouldn’t we try to build the best possible algorithms, and not just the best imitations of ourselves?

Of course, this is easier said than done. It’s hard to even imagine what something smarter than us would be like, much less make it a reality. However, I do think it’s possible if we view intelligence as simply a byproduct of the biological components within our bodies.

For example, humans have relied on just their legs to travel for much of human history. Only recently have we gained the capacity to take advantage of our environment and create devices and tools capable of transporting us much faster than we could ever move ourselves.

Is intelligence fundamentally different from walking? They’re both biological processes, both rely on our genetics and environment to some degree, and both can be measured and analyzed using a variety of sensing instruments.

No matter how you answer that last question, the fact remains that so far, our research into artificial intelligence has been at least partially limited by how we view the field. Instead of envisioning human intelligence as the end goal, we need to expand our view and consider all the possibilities available to us.

Promisingly enough, one of those possibilities is already being explored in remarkable detail: the idea of focusing not on the I.Q. of robots, but rather on their E.Q.

Michael Corkery of the New York Times grapples with one example of “emotional robots” in his article “Should Robots Have a Face?”. He delves into many of the social qualms people are having over the idea of robots being able to emulate human emotions, including whether “robots with friendly faces and cute names help people feel good about devices that are taking over an increasing amount of human work?”.

A repeated point Corkery makes is that many people are very uncomfortable with the idea of robots being able to imitate us, even if it’s on a very superficial level. I think it’s because they feel that the sheer existence of robots capable of emotion takes away something inherently human: the capacity for advanced expression. For much of history humans have been the only known entity capable of advanced emotion and expression – arguably, it’s the defining feature of our society.

And yet, when Pixar gives us a robot love story that still makes us sigh on the 10th re-watch (maybe that’s just me), we’re left to wonder whether we’re special at all.

The simple fact is that, on occasion, we do relate to robots. Think Wall-E, the Terminator Model 101, Bender, and C-3PO. But do we relate to these robots because we’re afraid they’re like us, or because we’re happy that they’re like us?

Do we want robots to be like us or do we want them to like us?

So far, the overwhelming sentiment in society is that we’re afraid of robots taking over. And given our history of dealing with things we don’t really have a complete understanding of, no one would blame people for thinking this.

Many researchers have found ways to incorporate this thinking into the frameworks we use to design our robots and artificial intelligence systems. Natalie Wolchover had a point to make about this (remember her? from part 1?): “Instead of machines pursuing goals of their own, the new thinking goes, they should seek to satisfy human preferences; their only goal should be to learn more about what our preferences are.”

To me, that sounds a lot like creating an autonomous slave. The idea is to create a robot that’s so scared of doing something to upset us that it will constantly check and make sure the actions it’s taking are appropriate. This theoretical robot is also incapable of doing most actions without some version of human supervision, either when it’s learning how to do a task or simply executing one.

In fact, many researchers go even further, saying that “giving goals to free-roaming, ‘autonomous’ robots will become riskier as they become more intelligent, because the robots will be ruthless in pursuit of their reward function and will try to stop us from switching them off” (Wolchover).

Then we must wonder: do we even want to build super-intelligent robots? Clearly, the impetus for all our research and development in the field so far has been to create a useful tool for human convenience. Yet there remains a fear in society about robots making us obsolete.

I think this fear comes from an ingrained human response to losing control. Simply because of our biology and the way we evolved, humans fear things they can’t control, and that extends to things we don’t fully understand, such as death.

As a result of the society we all live in, we’ve come to view control and order as the default manner of living for humanity. That’s not to say society is inherently flawed or anything, but it does raise the question of whether we’re even capable of seeing chaos and not being in control as good anymore.

I think that the question facing us today is not whether we should advance technology to the point where we have super-intelligent robots in our society. In my view, this outcome is inevitable, simply due to the nature of humanity. Rather, we should ask ourselves why we’re so afraid of this outcome.

Are we afraid of robots, or are we afraid of no longer being in control of the robots?

But if we’re going to think about what will happen when the robots we build develop true intelligence, then it’s equally important to think about the robots themselves when that happens.

Ethics in artificial intelligence so far has mainly focused on how we can use artificial intelligence in a manner beneficial for us. But as we saw earlier when we talked about our approach to designing intelligent systems, naively focusing on ourselves above all else isn’t the smartest approach.

It’s not impossible to believe that humans and robots will coexist one day. However, society in its present form likely wouldn’t be open to this kind of existence. We still view robots as tools and machines destined to be limited by humans and our comforts and intelligence.

We need to be less arrogant and more caring. Robots can be much more than simple reflections of ourselves – we need the courage to take the next step and transform into a society capable of accepting our inevitable loss of control.

Now, back to our original question.

C-3PO is humanoid, but we’d consider him to act a little stiff and (fittingly) robotic at times. R2-D2 is full of personality and charm yet takes a form most closely resembling a trash can.

Which is more comfortable for us? A robot that looks like us but doesn’t act like us, or one that acts like us but doesn’t really look like us?

Should we even care about how a robot is perceived by humans? Does a robot need to be human-like to be considered intelligent or not?

These will be the most pertinent questions society has to answer as we transition to an age of artificial life.

Well, barring the age-old: which one, R2-D2 or C-3PO?

Sources